8/18/2022

Can something be False but Not Fake? Taking a Look at the Images from the James Webb Space Telescope, Geiger Counters, Your Brain, and the Amazing Realm of Perception

Many of us were awed by the release of the first pictures taken with the James Webb Space Telescope (JWST). The telescope’s crystal-clear images identified previously unseen galaxies, which formed just a few hundred million years after the Big Bang, giving us a closer glimpse of the early universe. It also revealed many new instances of gravitational lensing, a phenomenon predicted by Einstein, where a strong gravitational field bends light. And it identified many stars in the process of formation, enveloped in clouds of dust and gas exposed to titanic forces unleashed by galaxy collisions or the explosion of older stars.

However, not everyone was thrilled. A group of skeptics started arguing that the photos were fake, and the fact that the first photo from the JWST was unveiled by President Biden in a ceremony at the White House provided the element of politicization. Someone also pointed out that the name of the galaxy cluster featured in the first image, SMACS 0723 (which stands for Southern MAssive Cluster Survey), reads “SCAM” when spelled backwards. Conspiracy theories arose claiming that the fake images are a cover-up and the telescope is really a spy satellite or a weapon of some sort. It also didn’t help that a scientist jokingly posted an image of a slice of sausage and claimed that it was an image of a nearby star taken by the JWST. Additional confusion was caused by the information that the colors in the images were not the original colors (they were false colors!), and that the images underwent a lot of computer processing (manipulation, eh? nudge, nudge; wink, wink) before being released to the public. So there you have it. A presidential photo op, hidden word messages, false colors, computer-generated images, fake science, and conspiracy theories.
It’s déjà vu all over again! Shades of QAnon, the 2020 election lie, the 9/11 conspiracy, and the moon landing hoax. All this nonsense is, of course, fiction. However, as has been stated many times by many people, truth is stranger than fiction.

There is a process called “transduction” where a signal of one type gets converted to a signal of another type. A classic example of this is a Geiger counter, where the signals produced by radioactivity (ionizing radiation) are converted (transduced) into sound by the sensors and electronics of the device. Radioactivity obviously does not make a sound. The sound is a false representation of the radioactivity, but this does not make the Geiger counter readings fake. This is because the sounds produced by the Geiger counter are correlated to the intensity and timing of the radioactive emissions. Thus, with the Geiger counter we can detect a phenomenon (radioactivity) that otherwise we cannot perceive with our senses.

The same thing happens with the images from the JWST. The images we have seen were taken with the telescope’s infrared cameras. But the problem is that, much in the same way that we can’t perceive radioactivity, we also can’t see light in the infrared range. If we were to look at an unprocessed photo generated from the data from the telescope, we would just see faint darks and greys. The infrared photos have been converted (transduced) to the visible range much in the same way that radioactivity is converted into sound by a Geiger counter. Colors have been assigned to these images in order for us to see them. So yes, the images we see are in false colors and have been processed by computers, but they are correlated to the realities that the JWST is imaging. Thus they are not fake.

And in case anyone remains skeptical about this, just consider that YOU do this all the time. Say what? Yes, you, or I should probably clarify, your brain, transduces signals all the time.
In other words, your brain constantly changes one type of signal into another. Let me explain. The light we see, the sound we hear, the odors we smell, the flavors we taste, and the things we touch are not sensed directly by our brains. They are sensed by receptors at the level of our eyes, ears, nose, tongue, and skin. These receptors then proceed to convert (transduce) these light, sound, odor, flavor, and touch signals into electrical signals. These electrical signals then travel to the brain through specialized structures in neurons called axons, and millions of these axons make up the cables that we call nerves. So when we are exposed to light, sound, odors, flavors, and things we touch, what the brain perceives is shown in the figure below. Those spikes in the image represent the electrical signals travelling down the axon of a neuron in time (the horizontal axis). This is the reality that the brain perceives. Not light, sound, odors, flavors, or the things we touch, but rather millions of these electrical signals arriving at it every second.

Now, do these signals make any sense to you? Of course not! The signals have to be transduced. The brain does something similar to what the Geiger counter does or what scientists working with the JWST do. The brain processes the electrical signals coming from our eyes, ears, nose, tongue, and skin and generates the sensations of sight, sound, smell, taste, and touch. These sensations are as false as the sound made by the Geiger counter or the color representations in the images of the JWST, but they are not fake in the sense that they are correlated to reality. So, for example, we cannot see the wavelength of the light that impacts our eyes, but our brain associates the wavelength of the light with colors in such a way that we perceive light of short wavelength as purple and light of long wavelength as red.
This association of false brain-generated sensations with the realities around us also takes place for the senses of sound, smell, taste, and touch. So to wrap it up, what you see, hear, smell, taste, and touch is false, just like the sounds a Geiger counter makes or the color of the images of the JWST, but not fake, because these things are all correlated to reality. Welcome to the amazing realm of perception! The image of the trains of electrical impulses belongs to the author and can only be used with permission. The image of the Cosmic Cliffs, a star-forming region of the Carina Nebula (NGC 3324), is by NASA and the Space Telescope Science Institute (STScI), and is in the public domain.

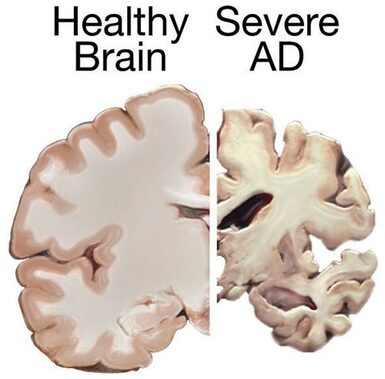

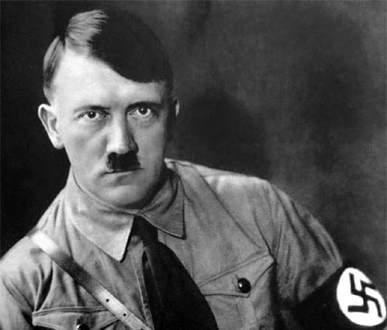

Scientists know that one important way to gain insight into how the human mind works is by observing what happens when it experiences a dysfunction. In this regard, scientists have documented some rare but remarkable neurological conditions and diseases.

The normal function of the brain may be altered as a result of a surgical procedure.

Split Brain

In the second half of the twentieth century, doctors carried out a radical surgery that severed the connections between the two hemispheres of the brain to treat incurable seizures. While this procedure was effective at stopping the seizures, it would leave both brain hemispheres unable to share information and coordinate with each other. These split-brain patients have been the subject of much research that has allowed scientists to gain insight into how the brain normally works. In humans, the right hemisphere receives nerve inputs from and controls the left side of the body, whereas the left hemisphere does likewise with the right half. With regard to vision, the left hemisphere receives information about the right side of the visual field (it cannot see the left side), with the opposite happening with the right hemisphere. Using this information, scientists were able to relay questions to the subjects and make them perform tasks in such a way that they could discriminate between the responses and performance of the right and the left hemispheres, and what they found was amazing. They discovered that the two hemispheres often displayed separate personalities and held different beliefs, with one split-brain patient famously answering the question “Do you believe in God?” with a “Yes” by one hemisphere and a “No” by the other. They also found that communication between both hemispheres was required for exercising normal moral judgement in fast answers to specific questions.

The normal function of the brain may also be altered as a result of a disease or a traumatic event.
Cotard's Syndrome

This one is also called “The Walking Corpse Syndrome” because the individuals afflicted by it develop what are called nihilistic delusions, in which they perceive themselves, or some of their body parts, to be dead, dying, or to not exist at all. People with this affliction will reduce food intake or stop eating because they have no use for this activity, as they believe themselves to be dead after all. Some patients will spend an inordinate amount of time in cemeteries. Cotard’s Syndrome is often associated with other conditions ranging from severe depression to neurological conditions and diseases.

Alien Hand Syndrome

In this curious disease, one of the hands of a person will start moving on its own without the person being able to control it. The hand will perform purposeful tasks, sometimes repeatedly, and may even undo things the other hand has just done, such as buttoning a button. Alien Hand Syndrome is associated with conditions that cause trauma to the brain and with neurodegenerative diseases.

Dissociative Identity Disorder

This disorder, which in the past was called Multiple Personality Disorder, is a situation where the identity of a person is split among at least two separate identities that take control over the individual. Each identity may have its own name, sex, race, and psychological and physical characteristics. Dissociative Identity Disorder is associated with psychological trauma, especially during childhood.

Apotemnophilia

People affected with Apotemnophilia have an overwhelming desire to amputate their limbs, with some expressing a wish to be paralyzed. Some of the afflicted individuals perform the amputations themselves or ask their friends, relatives, and health practitioners to help them with the process. Although scientists still don’t know what causes the condition, a possible explanation is a faulty representation of the limbs in the areas of the brain that deal with self-recognition of body structures.
Boanthropy, Clinical Lycanthropy, and Clinical Zoanthropy

Boanthropy is a condition where the affected persons believe themselves to be a cow or ox. They will actually go over to pastures, get down on all fours, and eat grass. Clinical Lycanthropy involves people who think they are turning into werewolves. These people see their bodies covered with hair and their fingernails and teeth elongated. These two conditions are cases of the broader disease called Zoanthropy, where people believe themselves to have turned into various animals. Some of these conditions are associated with diagnosed mental diseases such as schizophrenia, bipolar disorder, or severe depression.

So, what does the information I presented above reveal about ourselves and our brains? Most people accept that the brain plays a key role in movement. Individuals who break their spinal columns may be unable to move their limbs. I have posted a video of the time I had Bell’s Palsy, where a malfunction in a nerve paralyzed half of my face. Similarly, most people accept the role that the brain plays in processes such as learning, perception, and memory, and there are many examples of accidents or diseases that have led to impairment of these processes.

Nevertheless, despite the acceptance of the role of the brain in determining the processes described above, many people believe the human mind is something special. These people believe that there is something else, whether you call it soul, spirit, essence, or any other such term, that is responsible for the most fundamental aspects of the human mind, which they believe cannot merely be produced by a bunch of nerve cells.
However, the pathologies or conditions I have listed in this post and others indicate to us the importance of the brain not just in determining obvious things like movement, learning, perception, and memory, but also in determining things that lie at the very core of our humanity such as who we are, how we see ourselves, and what we believe in. If a bunch of nerve cells can create movement, learning, perception, and memory, why can’t they also determine our very nature? Much in the same way that the intestines can create digestion, why can’t the brain create the mind? Of course, science cannot say anything about the existence of a soul, essence, or spirit, but what we can say at this moment is that all the evidence we have so far indicates that everything that you are seems to be nothing more than the result of millions of nerve cells in your head communicating with each other in different patterns and at different times. Boggles the mind, eh?

The image is from Pixabay and is free for commercial use.

In this blog I have pointed out that there is a progression in emerging fields of scientific inquiry where competing theories are evaluated, those that do not fit the evidence fall out of favor, and scientists coalesce around a unifying theory that better explains the phenomena they are studying. However, even as a new theory that better fits the available data is accepted in the field, there are individuals who contest the newfound wisdom. Instead of accepting the prevailing thinking, these individuals buck the trend, think outside the box, and propose new ways of interpreting the data. I have referred generically to individuals belonging to this group of scientists that “swim against the current” as “The Unreasonable Men”, after George Bernard Shaw’s famous quote, and I have stated that science must be defended from them. The reason is that science is a very conservative enterprise that gives preeminence to what is established.
Science can’t move forward efficiently if time and resources are constantly diluted pursuing a multiplicity of seemingly farfetched ideas. However, this is not to say that the unreasonable man should not be heard. There are exceptional individuals out there who have revolutionary ideas that can greatly benefit science, but there is a time for them to be heard. One such time is when the current theory fails to live up to expectations. I am writing this post because such a time may have come to the field of science that studies Alzheimer’s disease (AD). Alzheimer’s disease is a devastating dementia that currently afflicts 6 million Americans. The disease mostly afflicts older people, but as life expectancy keeps increasing, the number of people afflicted with AD is projected to rise to 14 million by 2050. The disease is characterized by the accumulation of certain structures in the brain. Chief among these structures are the amyloid plaques, which are made up of a protein called “beta-amyloid”. The current theory of AD pathology holds that it is primarily the accumulation of these plaques, or more specifically their precursors, which is responsible for the pathology. Therefore, it follows that a decrease in the number of plaques should be able to alleviate or slow down the disease. This has been the paradigm that pharmaceutical companies have pursued for the past few decades in their quest to treat AD. Unfortunately, this approach hasn’t worked. For the past 15 years or so, every single therapy aimed at reducing the amount of beta-amyloid in the brain has led to largely negative results. In fact, some patients whose brains had been cleared of the amyloid deposits nevertheless went on to die from the disease. Several arguments have been put forward to explain these failures. One of them is the heterogeneity in the patient population. Individuals that have AD often have other ailments that may mask positive effects of a drug. 
According to this argument, performing a trial with patients that have been carefully selected stands a greater chance of yielding positive results. Another argument is the notion that many past drug failures have occurred because the patient population on which they were tested was made up of individuals with advanced disease. According to this argument, drugs will work better with early-stage AD patients that have not yet accumulated a lot of damage to their brains. Even though many researchers still have hopes that modifications to clinical trials like those suggested above will have the desired effect as predicted by the amyloid theory, an increasing number of investigators are considering the possibility that this theory is more incomplete than they had anticipated and are willing to listen to new ideas and open their minds to the unreasonable man.

One example of these men is Robert Moir. For several years he has been promoting a very interesting but unorthodox theory of AD and getting a lot of flak for it. He dubs his hypothesis “The antimicrobial protection hypothesis of Alzheimer’s disease”. According to Dr. Moir, the infection of the brain by a pathogen or other pathological events triggers a dysregulated, prolonged, and sustained inflammatory response that is the main damage-causing mechanism in AD. In this hypothesis, the production and accumulation of the amyloid protein by the brain is actually a defense mechanism! Dr. Moir agrees that sustained activation of the defense response will lead to excessive accumulation of the amyloid protein and that this eventually will also have detrimental effects. However, even though reduction in amyloid protein levels may be beneficial, accumulation of the amyloid protein is but one of several pathological mechanisms. Moir stresses that the main pathological mechanism that has to be addressed by AD therapies is a sustained immune response, which over time causes brain inflammation and damage.
He considers that accumulation of the amyloid protein is a downstream event, and it is known that the brain of people with AD exhibits signs of damage years before any amyloid accumulation can be detected. But much in the same way that Dr. Moir has been promoting his unconventional theory, there are many other theories proposed by others. Oxidative stress, bioenergetic defects, cerebrovascular dysfunction, insulin resistance, non-pathogen mediated inflammation, toxic substances, and even poor nutrition have been proposed as causative factors of AD. This is the big challenge that scientists face when opening their minds to the arguments of the unreasonable man: there is normally not one but many of them! So who is right? Which is the correct theory? And why should just one theory be right? Maybe there is a combination of factors that in different dosages produce not one disease but a mosaic of different flavors of the disease. And maybe the amyloid theory is not totally wrong, but just merely incomplete, and it needs to be expanded and refocused. Or maybe the beta-amyloid theory is indeed right and all that is required for success is to tweak the trial design and the patient population. Maybe, maybe, maybe… When a scientific field is beginning, or when it looks like a major theory in a given field is in need of reevaluation, there always is confusion and uncertainty. Scientists in the end will pick the explanation(s) that better fits the data and take it from there. They did that when most scientists accepted the amyloid theory and they will do it again if this theory is found wanting. The new theory that replaces the amyloid theory will not only have to explain what said theory explained, but it will also have to explain why the old theory failed and what new approach must be followed to successfully treat the disease. In the meantime, Dr. 
Moir’s theory, along with a few others, is the center of focus of new research evaluating alternative theories to explain what causes AD. The amyloid theory or aspects of it may still be salvageable, but in the field of AD it certainly looks like the time for the unreasonable man has come.

Note: after I posted this, I became aware of an article published in the journal Science Advances that proposes a link between Alzheimer's Disease and gingivitis (an inflammation of the gums). The unreasonable men are restless out there!

The image is a screen capture from a presentation by Robert Moir on the Cure Alzheimer’s Fund YouTube channel, and is used here under the legal doctrine of Fair Use. The brain image from the NIH MedlinePlus publication is in the public domain.

In his excellent 1994 book, The Astonishing Hypothesis, the late Nobel Prize winning scientist Francis Crick (co-discoverer of the structure of DNA with James Watson) put forward a hypothesis that boggles the mind. He wrote: “You, your joys and your sorrows, your memories and your ambitions, your sense of personal identity and free will, are in fact no more than the behavior of a vast assembly of nerve cells and their associated molecules.” He claimed that this hypothesis is astonishing because it is alien to the ideas of most people. This is presumably because, when it comes to our mind, we believe that there is something special about it. Clearly the mind is more than the product of the activity of billions of cells, no? Exalted emotions such as love and compassion and empathy, or belief in the divinity or free will, cannot just be a byproduct of chemical reactions and electrical impulses, right? But why would that be the case? Consider an organ like the intestine. It’s made up of billions of cells that cooperate to produce digestion. Most people will agree with the notion that the intestine produces digestion.
So, if we can accept that the cells that make up the intestine produce digestion, why can’t we accept that the cells that make up the brain produce the mind?

Let’s just touch on something simple, but that nevertheless goes to the very core of our notions of free will and consciousness. Consider an action such as performing the spontaneous motor task of moving a finger to push a button. In our minds we would expect that this and other such actions entail the following sequence of events in the order specified below:

1) We become aware (conscious) that we want to perform the action.
2) We perform the action.

But what goes on in our brains even before we become aware that we want to perform the action? Many people would guess: nothing. Whatever brain activity occurs associated with the action must logically occur after we become aware that we are going to perform the action. After all, how could there possibly be nerve activity associated with an action that we are not even yet aware that we want to perform?

Warning! Warning! - Insert blaring alarms and rotating red lights here - Fasten your existential seat belts because this ride is about to get bumpy!

In 1983 a team of researchers led by Dr. Benjamin Libet carried out a now famous experiment to evaluate this question. The researchers recorded the electrical activity in the brains of test subjects who were asked to perform a motor task in a spontaneous fashion, and they also asked the subjects to record the time at which they became aware that they wanted to perform the task. The surprising result of the experiment was that, while the awareness of wanting to perform the task preceded the actual task as expected, the electrical cerebral activity associated with the motor task performed by the subjects preceded by several hundred milliseconds the reported awareness of wanting to perform the task! This amazing experimental result has been replicated by other researchers employing different methodologies.
One study employing magnetic resonance to image brain activity established not only that the brain activity associated with the task is detected in some brain centers up to 7 seconds before the subject becomes aware of wanting to perform the action, but also that decisions based on choosing between two tasks could be predicted from the brain imaging information with an accuracy of 60%, significantly above chance. Delving even deeper into the brain, another group of researchers recorded electrical activity from hundreds of single neurons in the brains of several subjects performing tasks and found that these neurons changed their firing rate and were recruited to participate in generating actions more than one second before the subjects reported deciding to perform the action. The researchers could predict with 80% accuracy the impending decision to perform a task, and they concluded that volition emerges only after the firing rate of the assembly of neurons crosses a threshold.

The interpretations of these types of experimental results have triggered a debate that is still ongoing. The most unsettling interpretation is that there is no free will (i.e. your brain decides what you are going to do before you even become aware you want to do it). However, there are many critics who claim that there are technical flaws in the experiments, that the data is being overinterpreted, that the electrical activity detected is merely preparative with no significant information about the task, or that it is a stretch to extrapolate from a simple motor task to other decisions we make that are orders of magnitude more complex. In any case, the question of whether free will exists is in my opinion irrelevant because our society cannot function under the premise that it doesn’t. What interests me from the point of view of the astonishing hypothesis is the possibility that the awareness of wanting to perform an action before we perform it is merely an illusion created by the brain.
This notion is not farfetched. As I explained in an earlier post, the brain creates internal illusions for us that we employ to interact with reality. Colors are not “real”, what is real is the wavelength of the light that hits our eyes. What we perceive as “color” is merely an internal representation of an outside reality (wavelength). The same goes for the rest of our senses. As long as there is a correspondence between reality and what is perceived, what is perceived does not have to be a true (veridical) representation of said reality. Consider your computer screen. It allows you to create files, edit them, move them around, save them or delete them. However, the true physical (veridical) representation of what goes on in the computer hard drive when you work with files is nowhere near what you see on your screen. This is so much so, that some IT professionals refer to the computer screen as the “user illusion”. So, much in the same way that the brain creates useful illusions like colors that allow us to interact with the reality that light has wavelengths, or the computer geeks create user illusions (file icons) that allow us to interact with the hard drive, could it be that the awareness of wanting to perform actions, in other words, becoming conscious of wanting to do something, is just merely an illusion that the brain creates for the mind to operate efficiently? We are still in the infancy of attempts to answer these questions, but what is undeniable is that the evidence indicates that there is substantial brain activity taking place before we perform actions that we are not even yet aware we wish to perform, and that this brain activity contains a certain degree of information regarding the nature of these actions. 
As our brain imaging technology and our capacity to analyze the data get better, will we be able to predict with certainty what decision a person will make just by examining their brain activity before they become aware they want to make the decision? It’s too early to tell, but from my vantage point it seems that so far Crick’s astonishing hypothesis is looking more and more plausible.

The image of the cover of the book The Astonishing Hypothesis is copyrighted and used here under the legal doctrine of Fair Use. The Free Will image by Nick Youngson is used here under an Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license.

In the popular print and social media I often spot articles about the benefits of keeping an open mind. I also read how it is very important for scientists to keep an open mind. What these articles never discuss is the danger of keeping an open mind. This danger is that you will lose your power to discriminate between sound and fallacious ideas. For example, in 1917 two girls in the village of Cottingley in England took pictures of what appeared to be fairies flying and dancing around them. Among the many people fooled into believing the pictures were real was none other than the creator of Sherlock Holmes, Arthur Conan Doyle. More recent examples are the comedian and trickster extraordinaire, Andy Kaufman, who in 1984 visited a psychic surgeon to treat his cancer (he died), or the actor Dan Aykroyd, of Ghostbusters fame, who believes among other things in mediums and psychics and paranormal phenomena.

This is not to say that only non-scientists fall victim to keeping their mind too open. There are many scientists of renown who have ended up accepting ideas or theories that were dubious at best, or patently false at worst. The co-discoverer (along with Darwin) of the theory of evolution, Alfred Russel Wallace, was a believer in psychic phenomena and spiritualism, and led an anti-vaccination campaign.
Isaac Newton, the genius behind the laws of gravitation, believed the Bible had a code that predicted the future, which he tried to decipher for many years. The Nobel Prize winning physicist William Shockley co-invented the transistor and revolutionized society, but he also defended theories that proposed the intellectual inferiority of some races. Linus Pauling, a Nobel Prize winning chemist, advocated the use of vitamin C to cure cancer despite the evidence against it. Lynn Margulis, winner of the National Medal of Science, revolutionized the theory of evolution with the concept of endosymbiosis, which postulates that mitochondria and chloroplasts originated from bacteria. She also championed several fringe theories, and joined the 9/11 conspiracy movement that claims that it was a false flag operation to justify the wars in Iraq and Afghanistan. The irony is that Margulis had been married to that great skeptic, the late astronomer Carl Sagan. Kary Mullis won the Nobel Prize for the polymerase chain reaction (PCR), a technique which ushered in a revolution in areas ranging from medicine to forensics. Not only is he an AIDS denialist along with Peter Duesberg (see below), but he denies climate change and accepts astrology.

It is important to understand that the dangers of keeping an open mind have consequences that go beyond mere public ridicule. When people in positions of eminence are swayed by erroneous ideas, this can have a negative effect on society. Consider the brilliant scientist Peter Duesberg. He performed pioneering work in how viruses can cause cancer, but he was convinced that HIV did not cause AIDS. His advocacy for this idea influenced the South African president Thabo Mbeki, who delayed the introduction of anti-AIDS drugs into South Africa, leading to hundreds of thousands of preventable deaths.

In scientific research, keeping an open mind is a quandary that involves navigating between making two types of errors.
The first is that a mind that is too closed will reject things as false when they are really true. The second is that a mind that is too open will accept things as true when they are really false. The intuitive way to deal with this quandary is to try to strike a balance between the extremes. However, this is not how most scientists approach the issue. Science tends to be conservative in that it gives more importance to what has already been proven. Scientists view with skepticism those trying to subvert established science. The bar is set very high for the acceptance of new ideas. Most scientists view rejecting something as false when it’s really true as a lesser evil compared to accepting something as true when it’s really false. In the end, however, it will be the evidence and its reproducibility which will make the difference.

On the other hand, in the pseudosciences and the paranormal, the advice of keeping an open mind is often dispensed by those advocating for the existence of psychic phenomena, extrasensory perception, demonic possession, ghosts, telepathy, alien abductions, clairvoyance, mediums, astrology, witches, reincarnation, telekinesis, faith healing, and many other fantastical claims. I want to suggest that, as a first step, the safest frame of mind when considering these claims is to banish the open mind, and assume that the persons making the extraordinary claims are at best deluding themselves, and at worst liars and cheats.

This suggestion may scandalize many people, and may come across as an incredibly narrow-minded and unfair approach to investigating anything. How can you find out if something is true if you are prejudiced against the possibility that it’s true? The answer is that in this fringe you are dealing with events that, in principle, run counter to well-established scientific laws, or against mountains of evidence. In other words, you are dealing with the impossible.
By definition the impossible is not possible and should be treated as such. When considering these claims, if you keep an open mind, you have often lost the battle. This painful lesson has been learned by many scientists who investigated fantastical claims with an open mind just to be fooled by tricks so basic that they would make seasoned magicians roll their eyes (incidentally, this is also why it is always advisable to have a magician as a consultant when investigating these claims). Scientists are the worst possible individuals to rely upon when attempting the investigation of fantastical claims. Scientists are trained to deal with nature, and nature operates based on a fixed set of rules. Natural phenomena don’t change to prevent you from studying them. Nature doesn’t cheat, lie, or delude itself. An open mind is justified only when studying natural phenomena. An open mind in any other setting is a liability. Once you have ruled out trickery and self-delusion and established that what you are studying is indeed a natural phenomenon, then you can consider opening your mind to the possibility that it is true. Individuals ranging from common folk to Nobel Prize winners should always remember that if you keep your mind too open, people will dump a lot of trash in it.

The image is a scan of the original Cottingley Fairy pictures and is in the public domain in the United States. The open mind image by ElisaRiva is used here under a CC0 1.0 Universal (CC0 1.0) license.

Many people feel…well…“different”. They somehow believe that “something” is guiding them, urging them on to a future of significance. And this belief is reinforced by their life history. Maybe they narrowly avoided getting killed in an accident or in a situation of conflict, and maybe even not once but several times. Maybe they got a remarkable job, or a position, or a promotion that they thought they had no chance of getting.
Maybe they surprised themselves by achieving something that many others could not achieve. They suspect that there is more than luck involved in their achievements or near misses. Clearly some greater force (call it Providence, fate, or whatever) has chosen them and is protecting them in order for them to fulfil something in the future. There are many examples of such individuals.

Consider one of the most dramatic examples, Winston Churchill, who was Prime Minister of Great Britain during one of the most difficult periods in its history. From childhood to adulthood he survived so many diseases, accidents, and wartime situations that could have ended his life, that he developed the belief that he had been chosen for great things. This was seemingly proven true when Churchill, against nearly insurmountable odds, led Britain to victory during World War II. Could Churchill’s survival have been due to chance, or is there a deity, or force, or entity that protects and propels some individuals to overcome adversity and guide their people in times of trouble? This is not a scientific question, but we can certainly consider whether this proposition has some internal consistency.

Consider the diametrical opposite of Winston Churchill, Adolf Hitler. He not only survived four years of military service during the First World War, but he also survived many assassination attempts and persevered against all odds to become Chancellor of Germany. As did Winston Churchill, Hitler believed he had been chosen by providence to achieve great things; however, the world considers him today the epitome of evil: a deranged tyrant responsible directly and indirectly for the death of millions. How is the miraculous survival of Churchill any different from that of Hitler? If one is not fortuitous, it is hard to argue that the other is.
To preserve the consistency of the proposal that a deity, force, or entity chooses, protects, and propels some individuals towards good things in times of trouble, you would have to accept that there are also deities, forces, or entities that choose, protect, and propel individuals towards bad things. So, you are left with arguing that history is some sort of grand dueling ground of deities, forces, or entities, and we are the pawns.

There is, of course, a simpler explanation: chance. There are countless individuals on this planet who face extremely trying circumstances and perish in the process or are physically and/or psychologically scarred for life. A certain proportion of these individuals survive and go on to lead average lives. A smaller proportion develops the notion that their survival and success is somehow dictated by a higher power, and this factor may become a driving force behind the choices they make in life (both good and bad). Finally, out of this last group of individuals a select few find themselves in the right place at the right time, and have the smarts, the talent, and the character to propel them to positions of power where they can influence the lives of millions of people. These are the really scary ones. When you develop the notion that you are being guided by a higher power, there is the temptation to not listen to opposing views and to see all those who oppose you as standing in the way of the fulfillment of your rightful preordained destiny.

You may be tempted here to argue that you would choose Churchill over Hitler any day of the week. Nonetheless, consider this: in this post I presented Churchill and Hitler as polar opposites, but the truth is far more complicated. Churchill participated in, promoted, and sanctioned many despicable colonial and imperial activities of the British Empire, and he held dismal opinions of non-white cultures and individuals. In the real world, boundaries are blurry things.

Science has found that our brains are programmed to look for patterns, and we often can’t avoid making sense of the swirling reality around us by interpreting it within the context of our personal history and beliefs. Most of us may rationally understand the role of chance if we flip a coin ten times and obtain ten heads, but surviving ten assassination attempts is something that we may interpret in a vastly different way.
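The arithmetic behind the coin-flip comparison can be made concrete. What follows is a minimal sketch, not a model of anyone's actual life: the function names are made up for illustration, the population of one million people and the 50% chance of surviving each brush with death are hypothetical numbers, and the events are assumed to be independent of each other.

```python
from fractions import Fraction


def streak_probability(p_success, n_events):
    """Probability that n independent events all turn out favorably."""
    return Fraction(p_success) ** n_events


def expected_survivors(population, p_success, n_events):
    """Expected number of people, out of a population, who get the full streak."""
    return population * streak_probability(p_success, n_events)


# Ten heads in ten flips of a fair coin.
print(streak_probability(Fraction(1, 2), 10))  # 1/1024

# Hypothetical: one million people each face ten coin-flip-like dangers.
print(float(expected_survivors(1_000_000, Fraction(1, 2), 10)))  # 976.5625
```

Under these assumed numbers, close to a thousand people out of a million would come through all ten dangers by luck alone, and each of them could tell a story of survival against the odds. Knowing this rarely changes how such a streak feels from the inside.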
Such is the complexity of the human mind, but still we must try. So please, next time that sensation of feeling special, of being chosen, of being destined for great things comes along, do humanity a favor and read a little about probability. Thanks!

Churchill picture by Yousuf Karsh used here under a Creative Commons Attribution 2.0 Generic (CC BY 2.0) license. Hitler Picture, copyright by
