
4/28/2023

The Power of the Authority Figure: The Strip-Search Phone Hoax and the Milgram Experiment

I recently watched a documentary about how, from 1994 to 2004, a person impersonating a police officer called dozens of fast-food restaurants in over 30 states and convinced the managers to strip search one of their employees. The caller would vaguely describe a female employee of the restaurant and claim she was suspected of stealing money from a customer. The manager would then bring in the employee who most closely resembled the description, and the caller would give them the option of a strip search performed there or of the employee being taken to the police station. The caller talked calmly and fluently and used a very authoritative voice. He had a great command of psychology, and during the grueling sessions, which often went on for hours, he was able to manipulate otherwise decent, law-abiding citizens into performing and submitting to lewd, unlawful acts. The most dramatic of these events was that of Louise Ogborn, an 18-year-old employee of a Kentucky McDonald's restaurant, who was strip searched by an assistant manager and the assistant manager's fiancé; in her case the whole ordeal was recorded by a security camera. This video, which shocked the nation, was played in a lawsuit brought by Ogborn against McDonald's, in which she won a settlement. Whoever the caller was, he destroyed lives. Many of the managers and associated people who conducted the strip searches were fired and shunned by their communities, and some were brought to trial and convicted. Many of the women who were strip searched suffered from post-traumatic stress disorder. A man suspected of being the caller was arrested and brought to trial, but he was acquitted by a jury.

Most people are puzzled by occurrences such as these. How can average people be manipulated by a mere phone call into carrying out or enduring these acts? Why not just refuse and hang up the phone? Why not just say no to being strip searched? This brings us to the famous Milgram experiment. In a series of experiments begun in 1961, Yale University psychologist Stanley Milgram researched how people react to authority figures. The subjects (all men) were asked to participate in what was described as a “learning task” that investigated the effect of punishment on learning. The task involved the subject and a confederate of the experimenter, who were seemingly sorted at random into the roles of “teacher” and “learner”. However, the subject was always selected as the “teacher”. The teacher and the learner were then seated in separate rooms, but they could hear each other over a microphone. The learner was allegedly connected to an electrode, and the role of the teacher was to read words out loud, which the learner was supposed to memorize. The teacher would then ask the learner to repeat the words, and if the learner failed to repeat them correctly, the teacher was supposed to deliver electric shocks of an intensity that increased with each mistake. The learner did not really receive any shocks but pretended to receive them, and he also made mistakes on purpose. At the 75-volt level, the learner started screaming. The screaming became louder as the intensity of the shocks increased, and the learner would complain that his “heart was bothering him” as the 300-volt level was approached. After the 300-volt level was reached, the learner went silent.
As the subject (teacher) delivered shocks of increasing intensity that elicited louder screams, the experimenter would prod the subject to continue whenever he showed qualms about delivering the shocks, reassuring him that the shocks did not inflict any permanent damage and that continuing was necessary for the study. The results of the experiment horrified Milgram. Despite the learner’s increasingly loud screams, 65% of the subjects kept delivering shocks up to the maximum 450-volt level, even after the learner went “silent” when the 300-volt level was reached. Many of the subjects experienced serious distress as a result of what they were asked to do; nonetheless, a large number of them complied with the experimenter’s requests. Milgram surmised from his experiments that when prodded by a person whom they believe to be an authority figure (in this case the experimenter), many individuals will comply with that person’s instructions even if the instructions go against some of their strongest moral imperatives against harming fellow human beings. Other researchers at the time repeated experiments similar to Milgram’s and obtained more or less similar results. The methodology and conclusions of Milgram have been criticized, and national experimental guidelines enacted in the seventies have rendered these types of experiments unethical, so they cannot be reproduced today. But more benign forms of the experiment have been conducted with similar results. It is because of this that some people argue that the acts performed or endured by people in the strip-search phone hoax in response to what they thought were the requests of a policeman (an authority figure) can be explained in the context of Milgram’s experimental results. The original context of the Milgram experiment was about people hurting other people when prodded by an authority figure, but I wonder if this prodding can be employed in more subtle ways.
In present times, we are faced with the reality that large numbers of people have decided to forgo reason and accept misinformation, disinformation, and conspiracies such as those related to the antivaccine movement, the denial of the results of the 2020 election, or the bizarre QAnon world view. And these people have their trusted messengers whom they revere and whose utterances they accept as true. Could it be that these people view these trusted messengers as authority figures? Could it be that when these authority figures tell them to essentially disavow or ignore common sense, they somehow feel it’s OK to do it even though something inside them tells them that what they are accepting is inaccurate or wrong? I don’t know if this is true, but in view of the results of the Milgram and other similar experiments, it is certainly a possibility to consider.

The image by Nick Youngson from Pix4free is used here under an Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license.


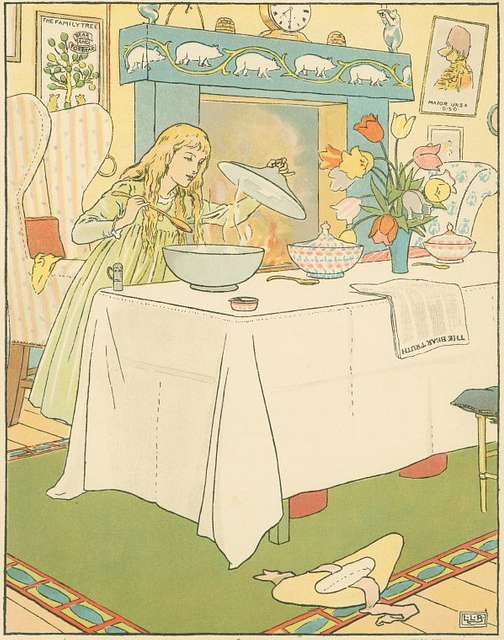

One of the accusations that I often hear nowadays is that a given person arguing for something is biased. Those who promote conspiracy theories use this very epithet against anyone who dares to criticize them, and likewise, those who dismiss conspiracy theory proponents argue that they are the ones who are biased. In the popular mind, a “bias” is a negative thing to have, and the condition of being biased is synonymous with not being able to know the truth. The “bias” label is particularly damning when leveled against a scientist. After all, scientists are in the business of discovering the truth about the way matter and energy behave in the world around us. How can scientists discover the truth if they are biased? In popular culture a biased scientist is as blind as a bat incapable of echolocation, and whatever science they do will not reflect reality.

Let’s first address this issue by stating the obvious. We are all biased, and most of the time the biases we have are not something we have consciously chosen, but rather a consequence of the way the wiring of our brain has interacted with our particular life experience and the stimuli to which we are currently exposed. But being biased is not necessarily negative. In fact, as it turns out, bias has a useful function! It allows us to simplify the complexity of our world in order to gain a measure of control over it. A bias allows us to quickly take action rather than be paralyzed by a multiplicity of seemingly equivalent alternatives. From this point of view, bias actually has a survival value and may have played a role in the successful evolution of our species. The downside of bias is, of course, that it can blind you to the truth. Thus, to avoid bias, some people suggest that we should keep an open mind. However, this suggestion, although well meaning, is misguided. If you keep your mind too open, people will dump a lot of trash into it.
The proper way to deal with bias is not to keep an open mind. The proper way to address bias is to strike a balance, which I call “finding the Goldilocks zone”. This entails accepting that we are all biased, that we can’t help being biased, and that, in fact, a bit of bias can be a good thing, while at the same time taking steps to counter the excess bias in ourselves through thought and action. Now let me tell you how scientists do this. But before I do that, let’s state that science has a healthy inbuilt bias. Science has a bias for established science. The majority of scientists believe that accepting something as true when it is false is a greater evil than rejecting something as false when it is true. This is because established science has at least grasped some aspects of reality. If other scientists want to replace established science with a more complete description of reality, then the burden of proof is on them. This bias for established science is needed to protect science from error. Thus, you may ask: If scientists are biased for established science, how can they discover anything new? The answer is by using anti-bias protocols. For example, scientists will analyze or score the results of some experiments in a blind fashion. This means that the person doing the scoring or the analysis does not know the identity of the different experimental groups. In clinical trials, it means that the patients do not know which treatment they received, or even that neither the patients nor the doctors know which treatment is which (double-blind protocol). Scientists will also seek to reproduce each other’s observations or experimental results. If an observation or an experimental result cannot be reproduced, then it will not gain traction. Finally, some funding agencies will devote a certain amount of their resources to funding scientists with unorthodox views to promote the debate of alternative views in a scientific field. But how can non-scientists counter their biases?
First of all, you have to be exposed to all the facts. For example, if you listen only to conservative or only to liberal media, you will not learn about some issues or views. You need to listen to the other side. However, this does not mean that you have to force yourself to listen to the conspiracy-laden drivel coming out of far-right or far-left media. Instead, try to locate a moderate news source or a news source that leans slightly towards the side of the political spectrum opposite to the one you favor. The idea is not to open your mind to what these news outlets have to say, but rather to become aware of the issues they are covering, why they find them important, and what their arguments are. Avoid insulating yourself and living in an “echo chamber”. Second, try to identify a person from the other side who holds views different from yours and sit down to have a talk one day. But when I say “person”, I don’t mean a nutjob who spews far-out nonsense and will engage you in a shouting match. Choose a reasonable person. There are quite a number of these out there. A rule of thumb for choosing a reasonable person is to look for someone who, despite disagreements, accepts that “the other side” has made valuable contributions and is necessary to the debate. Nothing beats discussing issues with an individual who disagrees with you but also respects you. And finally, learn to identify the characteristics of bias. Sweeping generalizations, innuendo, exaggerations, hearsay, judging the many by the actions of the few, the creation of strawmen, ignoring weaknesses in arguments, not seeing the forest for the trees, and attacking the person instead of the argument all signal an emotional and irrational approach to issues, one that is indicative of a person who is biased and therefore unable to correctly grasp reality. Ask yourself if you are exhibiting these traits when you engage in arguments. Ask others who will tell you the truth if you are exhibiting these traits.
So to wrap it up, a little bias is acceptable and even healthy, but too much bias can mess up your perception of reality. When it comes to bias, try to find the Goldilocks zone.

The image “Goldilocks tastes the porridge” from the New York Public Library is used here under a Creative Commons CC0 1.0 Universal Public Domain Dedication ("CC0 1.0 Dedication") license.
