It’s time to lighten up my blog, so I again present you with a selection of bad science jokes and puns along with an explanation of why they are funny. Q: What type of fish is made up of two sodium atoms? A: 2Na The chemical symbol for sodium is “Na” (its Latin name is “natrium”). The joke is a play on words on how the first two letters of the name for the fish, tuna, (“tu”) sounds like the word “two”, and is followed by the chemical symbol of sodium. If you feel irrelevant, remember, YOU MATTER! Unless, of course, you are accelerated to the square of the speed of light, in which case, YOU ENERGY! The joke makes a reference to Einstein’s famous equation which states that energy is equal to mass times the square of the speed of light, and exploits the ambiguity in the meaning of the word matter as in “that which occupies space” vs “someone being significant”. A psychologist shows a patient several inkblots, and the patient replies that they all look like a couple making love. The psychologist tells the patient, “Based on the results of the test, I conclude that you seem to be obsessed with sex.” The patient retorts, “What do you mean I’m obsessed with sex? You are the one who is showing me all the dirty pictures!”

The joke is based on an actual test called the Rorschach Test, which uses inkblots. It is used by psychologists to test for thought disorders such as schizophrenia and, more controversially, to evaluate a person’s personality. Q: Why is it dangerous to make fun of a paleontologist? A: Because you will get Jurasskicked. It’s a play on words on the time period called “Jurassic”, corresponding to 200 to 150 million years ago (when dinosaurs roamed the Earth), and the slang term for being attacked and getting severely injured. A few time travel jokes. “One beer please.” A time traveler walks into a bar. I would tell you a time-travel joke, but you didn’t get it. The seminar on Time Travel will be held two weeks ago. The best thing about time travel jokes is that they never get old! A philosophy horse enters a bar and orders a beer. The bartender serves him, but notices that the horse seems sad, so he asks, “Hey, are you depressed or something?” The horse says, “I don’t think I am.” and disappears! This joke is based on the famous statement by the French philosopher, Rene Descartes, “I think, therefore I am” (in Latin: cogito, ergo sum). I should have probably mentioned this at the beginning of the joke, but I felt that would have been like putting Descartes in front of the horse. The joke is based on the play on words with the phrase “putting the cart in front of the horse” (note: Descartes is pronounced: “daykaart”). Q: What do you call places in your house that accumulate a lot of dirt and dust? A: LaGrunge Points. A play on words on “Lagrange Points”, which are areas in space around the Sun-Earth system where gravitational forces are balanced, and objects tend to remain stationary with respect to the Sun and the Earth. These points tend to accumulate a lot of space dust. A black hole is the tunnel at the end of the light. Math puns are the first sine of madness. “Sine” is a mathematical function used here as a pun for “sign”. 
Today in math we learned that 10 is smaller than 5! There is a mathematical function called a “factorial”, which is the product of all the positive integers up to and including a given number. The factorial is denoted by the symbol “!”, so the factorial of 5 (5!) is 5x4x3x2x1, which equals 120. Note: the period at the end of the sentence of the joke was left out on purpose for comic effect. Scientists have been studying the effect of cannabis on sea birds. They’ve left no tern unstoned. A play on words on “no stone unturned” and “tern”, the seabird. Stoned, of course, is the slang term for being under the influence of drugs. The naïve student registered for the computer programming class using Python and Java because he thought he would look cool drinking coffee with a snake wrapped around his body. Python and Java are computer programming languages. My friend the geologist saw that I was depressed, so she said she would cheer me up with ten geology puns. She asked, “Are you having a gneiss day?” I closed my eyes and groaned. My friend stated, “Well, anyone can have a bad day - schist happens.” I placed my hands on my head and said, “Aaaahhh.” She continued, “Come on, cheer up, let’s watch a movie. We can see Pyrites of the Caribbean.” I rolled my eyes, and said, “Look, I don’t particularly like geology.” My friend replied, “Of quartz you like geology. It’s just that you don’t take it for granite.” I shook my head and buried my face in my hands. My friend said, “I could also just cook a meal for you. Or have you lost your apatite?” I said, “Please stop it.” She added, “Or I could take you shopping for one of those marble-ous pendants made out of volcanic rock. They are guaranteed to cheer you up, you know, because igneous is bliss, and they are on shale.” “Look,” I yelled, raising my hands. “This is not working.” She retorted, “Oh, so if my puns are not working, what then? Do you expect me to gravel at your feet?”
I said, “I appreciate your effort to try to cheer me up with your ten geology puns but…” My friend interrupted me and said, “You mean no pun in ten did?” Me: Ha, ha, ha… To my knowledge these jokes and puns are not copyrighted. If you hold the copyright to any of these jokes or puns, please let me know and I will acknowledge it. Image by Perlenmuschel from Pixabay is free for commercial use and was modified.


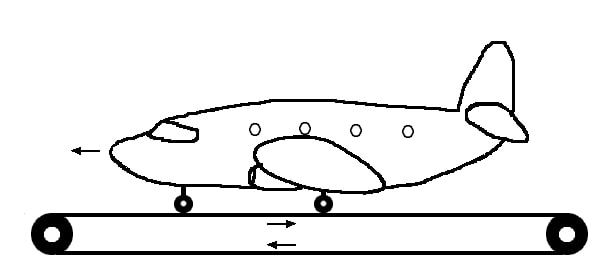

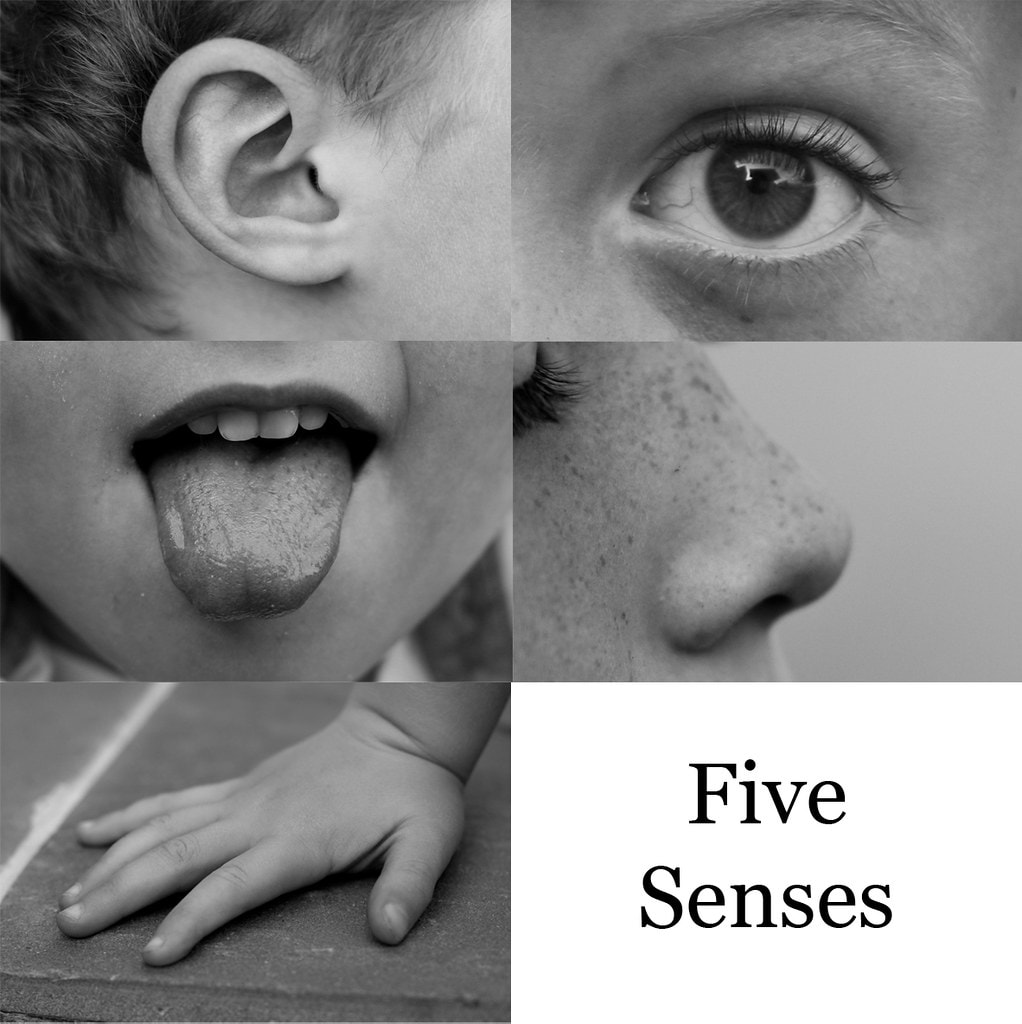

I have dealt with antivaxxers several times in my blog, and I have made my position clear. All the evidence we have indicates that vaccines work and have saved many lives, including the COVID-19 vaccines. I consider that by promoting vaccine hesitancy or avoidance, antivaxxers are harming and even killing people who delay or forfeit potentially life-saving vaccines. Some of the antivaxxers I’ve butted heads with in social media seem to be individuals that, no matter how misguided, are convinced of their position. These individuals respond to my criticism and engage in debates. However, others are nothing but opportunists who are not interested in countering rebuttals of their position. These characters just traffic in likes and shares of what they post, and in the number of comments they receive regardless of their nature. They don’t necessarily believe in antivaxxer arguments, they just use them to grow their accounts, which have tens to hundreds of thousands of followers. In between these two types of antivaxxers is a third kind who is halfway between the previous two. The accounts of these characters have a few hundreds to thousands of followers, and they are very active posting, liking, and sharing vaccine misinformation. They will occasionally respond to criticism, but they will seldom carry on an argument for more than one or two posts. Most importantly, I’ve noticed that they coordinate to like and share each other’s posts, and what they write at times seems to have an odd grammatical structure and choice of words, as though their first language were not English. I have been told that these accounts belong to foreign operatives who are promoting antivaxxer sentiment in the United States and other things to disrupt our society. The concept is not so farfetched as we know for a fact that Russian operatives posted on social media to influence our elections and create chaos. But without specific proof, this is just another conspiracy theory. 
As many of those who follow my posts know, I have also criticized those who spread conspiracy theories, but what is often lost in the heat of the arguments is that I am talking about unfounded conspiracy theories. I accept the fact that there have been conspiracies promoted by the government or other organizations, and I accept that there may be such activities currently going on, but I have always stressed that you need to have evidence to correctly identify them as such before claiming that there is a conspiracy. Acting otherwise is irresponsible. It is with this in mind that I was surprised to learn about a conspiracy by the US government to spread antivaxxer messages! At the start of the COVID-19 pandemic, China engaged in the spreading of propaganda claiming that the COVID-19 virus came from the United States. Then, in order to increase their influence in other countries, China supplied the COVID-19 vaccine they developed and other things to the Philippines and nations in central Asia and the Middle East that still did not have access to the vaccines made in the United States (a move known as “vaccine diplomacy”). To retaliate for this and counter Chinese influence, the Trump administration authorized a clandestine psychological operation to be carried out by the military in which, using hundreds of fake social media accounts that ended up accumulating altogether tens of thousands of followers, they fanned antivaxxer sentiment in those countries and spread misinformation about the Chinese vaccine. The accounts posted suggestive things such as that the virus came from China and the vaccine too, that the vaccine from China was fake, that it might be a rat killer, and that it contained pork gelatin (this was targeted to Muslim countries). This was at the time that tens of thousands of people were dying each day as a result of COVID-19. After the Biden administration came into power, this particular disinformation program was shut down. 
The above is not an unfounded conspiracy. We know it is true because the news organization Reuters, which broke the story, conducted interviews with contractors working for the U.S. Army, retired and active U.S. officials, academic researchers, and social media analysts, and reviewed data regarding fake social media accounts used by the U.S. military. I have been actively countering antivaxxers and promoting vaccines for years, so I am appalled that our own government participated in spreading these messages. Now, let me make it clear that I am not ignorant about the cutthroat environment of international politics. China is a dictatorship where there is no freedom of the press. People in China who try to investigate any alleged foreign psychological operations carried out by Chinese military operatives will end up in jail. The reason Reuters was able to discover what the U.S. military was doing, and the reason we learned about it, is the freedoms we enjoy in this country. I could not have written this post if it were not for those freedoms. Therefore, I understand that the United States has to fight against governments such as the Chinese on several fronts, including the psychological realm, to counter their influence. However, I don’t think the way to do it is to spread antivaxxer messages or misinformation about a vaccine. And in thinking like this I am not alone. Top U.S. diplomats in Southeast Asia strongly objected to the anti-vax campaign, but they were overruled. Reuters quotes one American military official saying, “We weren’t looking at this from a public health perspective. We were looking at how we could drag China through the mud.” In the end, China’s vaccine diplomacy was not as successful as they expected, although probably not due to the psychological operation carried out by the U.S. government, but rather due to hard power tactics that China employed in many countries where they came across as an aggressor. Whether the U.S.
caused damage due to its antivaxxer campaign is difficult to discern. The evidence we have indicates that the Chinese vaccine worked reasonably well against the initial variants of the COVID-19 virus. In places where only the Chinese vaccine was available, it saved lives. Therefore, anyone forgoing the Chinese vaccine could have placed themselves in danger. I am not a military operative specialized in conducting psychological operations. But I would venture that any way of countering the influence of China or other countries or organizations has to take into account the well-being of foreign nationals. Instead of conducting this antivaxxer campaign, the U.S. government could have, for example, been more proactive in sharing the COVID-19 vaccines developed in the United States with other countries from the beginning to build up goodwill towards the United States. That’s just my two cents.

The unmodified image by Christian Emmer is used under an Attribution-NonCommercial 4.0 International Deed (CC BY-NC 4.0).

In a hearing about the coronavirus pandemic, Congresswoman Marjorie Taylor Greene hounded (no pun intended) Dr. Fauci about experiments conducted with beagles which were approved by the National Institute of Allergy and Infectious Diseases during his tenure as its director. She called these experiments disgusting and evil, stated that Americans don’t pay their taxes for animals to be tortured like this, and refused to call Dr. Fauci a doctor. This hearing was about the coronavirus pandemic, so at one point a puzzled Fauci asked, “What do dogs have to do with anything we are talking about today?” To be fair, some of Marjorie Taylor Greene’s other questions did address matters related to the coronavirus issue, although she did not give Fauci a chance to answer them, at one point even saying, “Nah, I don’t need your answer.”
Nevertheless, what I want to address in this post is the issue of the beagle experiments (which has been termed “Beaglegate”) because it often receives superficial treatment every time it is brought up. The experiments in question involved beagle dogs which were anesthetized and then placed in contact with sand flies carrying a protozoan parasite called Leishmania. There are about 700 thousand to one million cases of Leishmania infection worldwide each year, and in many countries where this happens, dogs are the main animal reservoir for the parasite in urban areas. How severe a Leishmania infection is depends on the strain of the parasite. The most common variety of the parasite will produce a cutaneous form of the disease, which will just produce scarring at the site of infection. However, more pathogenic varieties of the parasite can produce visceral leishmaniasis, which, if left untreated, can be lethal in 90% of the cases among children under 5 years of age, adults over 50 years of age, or people with comorbidities or compromised immune systems. While leishmaniasis is a disease associated with developing countries, it has already made its way to Texas. Therefore, this disease is of interest to scientists and medical doctors from a public health perspective, and because dogs are often involved in the transmission of the parasite in urban environments, they have been used extensively as experimental models to study the disease (for example, click on these links: 1, 2, 3, 4, and 5). So yes, dogs are used in experiments where they have to be infected with the parasite by various means, and yes, the dogs will face a certain amount of discomfort and pain, and yes, they will be euthanized at the end to obtain biological samples and to evaluate the changes the disease produces in their bodies. My question then is: what is the problem? Here many people get emotional. They would say, “The poor dogs were being ‘eaten’ by sand flies in these experiments! This is torture!
This is cruel! This is immoral! This is…etc.” These, of course, are value judgements. As a society, we have to decide what our values are with respect to issues involving dogs. For example, in some countries it is legal to breed dogs commercially to eat them, but in the United States the Dog and Cat Meat Trade Prohibition Act of 2018 ended commercial dog meat businesses. So, if people want to ban experimentation with dogs, they should petition their elected representatives. However, as of now, it is perfectly legal to conduct experiments with dogs. Between 2007 and 2019, more than 8,000 publications in scientific journals involved dogs, and of these, more than 5,000 involved beagles. Experimentation with dogs has led to many discoveries and medical treatments. Dogs were employed in the experiments that led to the discovery of insulin. Research on dogs in the field of cardiology led to the first electrical defibrillator. Dogs were also used in the development of artificial heart valves. Because dogs can be bred to develop muscular dystrophy like humans, they have been used for researching this condition, leading to better genetic tests and treatments. Dogs were used in the first demonstration that cigarette smoke causes cancer, and to show that secondhand smoke causes cancer too. A lot of what we know about the effects of radiation on health comes from experiments with dogs. Many new drugs are also tested on dogs before beginning clinical trials with humans. And research on dogs has also benefited dogs. Vaccines against rabies, parvovirus, and canine hepatitis were developed using dogs in the research. Research on dogs has also led to improved canine nutritional guidelines and medicines to treat dogs.
Insofar as science is concerned, scientists working with dogs and other animals as experimental subjects are required to follow guidelines to ensure good animal welfare both by the institutions where they work and by the institutions which provide their funding. If they don’t, they should be sanctioned, but nobody is arguing that the experimental guidelines were not followed in the Beaglegate experiments. As to Dr. Fauci, he did not specifically and individually approve the grants for the beagle experiments. The grant review process first involves a review by a group called the Scientific Review Group made up primarily of non-federal scientists with expertise in the relevant areas of research. The grants are then reviewed by another group called the Advisory Council, which is made up of scientific and public representatives chosen for their expertise, interest, and activity in areas of public health. The only grant applications funded are those recommended by both groups. At the end of this process, Dr. Fauci, as director of the institute, formally approved bundles of thousands of grants at a time, which he did not read or review on an individual basis. My opinion on this whole matter is that the outrage over “Beaglegate” is just another way that Fauci haters have tried to slander him. Dr. Fauci has saved or improved the lives of tens of millions of people with his research and his public service. After not being able to tarnish his impeccable reputation, they have resorted to exploiting the fondness of people for cute animals to smear Dr. Fauci over perfectly legal experiments that are critical for public health.

The image, designed by Wannapik, is used under a non-commercial license.

Today I will address a question/meme that has been around for decades, and resurfaces every now and then to create controversy.
So the question/meme is: if an airplane is on top of a conveyor belt as wide and long as a runway, and the belt is designed to match the speed of the airplane’s wheels while moving in the opposite direction, will the airplane be able to take off? This question has generated multiple debates among both scientists and laypeople for many years. The question even inspired an attempt to test it in the television series MythBusters, where its hosts, Jamie Hyneman and Adam Savage, found that the airplane was able to take off from a tarp that was being pulled by a vehicle in the opposite direction. However, many people claimed that this was a bogus test because the vehicle pulling the tarp did not precisely match the speed of the wheels of the airplane moving in the opposite direction. As to the original question, initially I thought the airplane would not be able to take off. I reasoned that if the conveyor belt can exactly match the speed of the wheels, the airplane would not be able to move forward to generate the wind speed necessary for the air flowing around the wings to generate lift. However, I realized that I was thinking about the wheels of an airplane much in the same way I think about the wheels of a car, and this is a mistake. Let’s compare a car vs. an airplane on a conveyor belt that moves in the direction opposite to the movement of the car/airplane, matching the speed of the wheels. In order to advance, the car needs friction between the tires and the ground (traction). A car on a very slippery surface will just spin its wheels hopelessly. So by moving in the opposite direction, you can imagine that the conveyor belt essentially nullifies traction for the car. In the case of the airplane, friction between the wheels and the ground is not needed for the airplane to advance. This is why airplanes on skis can take off on runways made out of ice and snow. The motor of the car pushes against the ground via the wheels.
Its forward movement depends on the wheels and their friction with the ground. On the other hand, the engines of the airplane push against the surrounding air. Its forward movement does not depend on the wheels. The airplane’s wheels are passive – they spin freely. The conveyor belt will not slow down the plane. Although this made sense to me, I still had problems visualizing the situation, because in my mind every turn of the wheel is matched by the conveyor belt moving in the opposite direction, so how can the airplane possibly move forward? Then I realized that the conveyor belt will also make the wheels of the airplane spin due to the friction between the wheels and the belt. In which direction does the conveyor belt make the airplane’s wheels spin? If you look at the airplane from the side, say with the nose facing your left and the tail your right (wheels down, of course), the airplane will be trying to move from right to left, the conveyor belt surface will be moving from left to right, and the wheels will be spinning…counterclockwise. In other words, in the direction of the airplane’s movement! But here is the problem: when the airplane moves forward, it will also make the wheels spin in that direction. Therefore, the speed of the wheels will be a result of that caused by the airplane plus that caused by the conveyor belt. This creates a problem in the basic premise of the problem. For example, if the airplane accelerates, making the wheels spin forward at say 100 revolutions per minute (rpm), and the conveyor belt accelerates in the opposite direction to match the spin of the wheels at 100 rpm, then the combined (total) forward spin of the wheels will be 200 rpm (100 + 100). And if the conveyor belt accelerates to match that total speed (200 rpm), then the final speed of the wheels will be 300 rpm (200 + 100). So we have an impossibility. 
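To make the arithmetic in the 100, 200, 300 rpm example concrete, here is a minimal Python sketch. It is only an illustration under simplifying assumptions that are not part of the original puzzle: the belt is assumed to re-match the total wheel spin once per "round", and the function name `wheel_speeds` and its parameters are hypothetical.

```python
def wheel_speeds(plane_rpm, rounds):
    """Return the total wheel spin after each round of the belt catching up.

    Hypothetical helper for illustration only. plane_rpm is the spin that the
    airplane's own forward motion gives the wheels; the belt is assumed to
    re-match the total wheel spin instantly once per round.
    """
    belt_rpm = 0.0
    history = []
    for _ in range(rounds):
        wheel_rpm = plane_rpm + belt_rpm  # total spin = airplane part + belt part
        history.append(wheel_rpm)
        belt_rpm = wheel_rpm              # belt speeds up to match that total
    return history


print(wheel_speeds(100, 3))  # [100.0, 200.0, 300.0]
```

In this sketch the wheels always stay ahead of the belt by `plane_rpm`, so the belt can only "match" the wheels when `plane_rpm` is zero, that is, when the airplane is not moving at all.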
Even if the conveyor belt accelerates to infinity, it will never be able to match the total speed of the wheels because it will always add an extra amount of spin to them! Of course, if the conveyor belt moves instead in the direction of the airplane’s movement, which will make the wheels spin backwards (against the movement of the plane), it will be able to counter the spin of the wheels, which will remain stationary. But then the conveyor belt will just keep accelerating and dragging the airplane along until it takes off. Finally, if you are curious about applying my reasoning above to the case of the car, consider that the car’s wheels are not freely moving. Only the motor of the car can move the wheels. The conveyor belt moving in the opposite direction will not make the car’s wheels spin forward. It will not add an additional spin to the wheels. So in the case of the car, the conveyor belt can match the speed of the wheels. Of course, if you put the car in neutral (disengaging the wheels from the motor) and attach a jet engine to its roof, you will end up with a situation similar to that of the airplane. Although thinking this way made sense to me, I went online and found that communities of scientists had tackled this question before and conducted computer simulations. The long and short of it is that, yes, the airplane indeed will take off. Nevertheless, there would be insurmountable problems in trying to test the question in a real-world situation where a conveyor belt tries to exactly match the speed of the wheels. The conveyor belt and the wheels of the airplane would accelerate to speeds so great that it would destroy them, and the conveyor belt would move so fast that it would begin to generate a wind current against the airplane that could provide a certain amount of lift. This concludes my foray into the Airplane on a Conveyor Belt conundrum. What do you think? 
The drawing of the plane on the conveyor belt belongs to the author and can only be used with permission.

5/17/2024 The Emotional Perils of Child Testimonies and the Potential for Descent into Madness

Back in 1692 in the town of Salem in Massachusetts, a group of girls started exhibiting strange behaviors including altered speech, convulsions, and trance-like states. The local physician examined the girls and concluded that they were victims of witchcraft. The town’s government accepted this diagnosis and asked the girls to name their tormentors, whom they proceeded to jail and try in court for witchcraft. Salem spiraled into madness as the girls’ accusations placed hundreds of innocent people in jail with twenty of them being executed. One could look upon this case as an incident from a long-gone era when people still operated based on superstition, as science had not yet studied mental illness in its many facets. However, there are some leftovers from these times that still linger in our societies. I saw a remarkable documentary on Netflix called “The Outreau Case: A French Nightmare”. It all began in 2001 when social workers raised concerns about the behavior of the children of a family that lived in the town of Outreau in France. The children were questioned, and evidence of sexual abuse by their parents emerged. When the children were examined by psychologists, they were deemed to be credible witnesses. The prosecution of the case was carried out by a young and inexperienced magistrate who was eager to make a name for himself. After the parents were arrested, they confessed to the charges, but then the mother implicated other people in the abuse, which was in turn supported by additional testimony from the children.
This was followed by more accusations and more confirmatory statements from the children, which escalated into a vicious feed-forward cycle that implicated more than 40 adults and identified dozens of more children as potential victims of abuse. Of the adults named in the testimony of the mother and the children, a dozen were arrested and held in jail for periods of one to three years. The whole affair was chronicled by French newspapers with sensationalist headlines about a vast pedophile ring which sparked outrage. In a bizarre twist, one of the persons implicated testified that he had witnessed the murder of a little girl, which fanned the flames of the scandal, but the alleged girl’s body was never found. After five years and two trials, where the mother ultimately admitted to lying when she implicated others, and where some of the children also admitted to lying or their testimonies were found to be rife with inaccuracies, all the extraneous people implicated in the crime were found not guilty. One of the defendants died while in jail, and the lives of the others were wrecked with some people being ostracized by family members, friends, and acquaintances, and losing their jobs and their businesses. At the same time, the outcome of the case sparked claims of a coverup and related conspiracies. The United States had gone through something similar during the 1980s and early 1990s with the day-care child abuse hysteria during which many day-care providers were accused and some were convicted of child abuse based on the testimonies of children. The children in these cases were taken away from their parents and repeatedly and aggressively questioned by overzealous police and social workers in ways that ended up manipulating their memories. Some children ended up testifying that they were forced to participate in orgies, and that they witnessed people partaking in satanic rituals where they killed babies and drank their blood. 
This led to fear-inducing headlines in newspapers across the nation that just fueled the panic. In the vast majority of the cases, the people arrested were not convicted, and those convicted had their convictions overturned, all due to lack of any evidence that corroborated the testimony of the children. The aftermath of the case left a trail of broken lives and lingering social distrust. Whether children’s testimony is more reliable than that of adults has been a very controversial topic in the psychological and legal fields. The early view that children’s testimonies are inherently more unreliable compared to those of adults has been revised in recent years. However, there is ample experimental evidence that children can be induced to recall things that did not happen or even lie when repeatedly questioned in a biased manner under coercive situations by parents or authority figures. For this reason, it is important that those questioning children follow specific forensic interviewing protocols which take into account the age and language capabilities of the children. This is also important because real pedophiles in their defense will poke holes in the testimony of children if it was obtained inappropriately, which could allow them to go free. The Outreau case involved an overzealous magistrate who was willing to accept the accusations made by the mother of the children, who in turn was willing to keep telling him what he wanted to hear. The day-care sex abuse hysteria in the United States involved prosecutors and other individuals determined to find what they were looking for even if they had to coerce it out of the children. However, one of the greatest problems I see in these cases, at least in the initial phases where arrest warrants are issued and suspects are indicted and jailed awaiting trial, is the uncritical acceptance of the testimony of children. 
In our societies, child abuse is a very emotional topic, and children are often viewed as paragons of innocence. When a child upon being questioned seems to provide evidence that implicates an adult in wrongful conduct, the visceral emotional reaction of many people favors the uncritical acceptance of the child’s testimony regardless of its context, how it was obtained, or whether additional corroborating evidence is present. A recent resurgence of the emotionalism characteristic of this mindset was the QAnon phenomenon of a few years ago, when millions of people ended up believing that there is a worldwide cabal of satanic cannibalistic pedophiles that includes prominent political and entertainment celebrities. I debunked all of the QAnon claims and even engaged several of these individuals through social media, but it was pointless. As soon as you questioned their claims, they would accuse you of being part of the conspiracy! Now imagine if QAnon adherents had been in a position to fully wield the power of the judicial branch of our government. From Salem to Outreau, the day-care child abuse hysteria, and QAnon, the potential for descent into madness over the emotional issue of child abuse is still ever present in our societies, and now it can be easily amplified many times by the far-reaching power of the media. Thankfully, we have past experience and scientific studies to guide us in implementing the proper methods to deal with child witnesses and to identify testimony that has been wrongfully obtained or which is unsupported by evidence.

The child abuse image by Nick Youngson from Pix4free is used here under a CC BY-SA 3.0 license.

My family and I drove to the house of some relatives in the town of Nashville, located in Brown County, Indiana, to watch this year’s solar eclipse.
The town was humming with preparations for the event, and local vendors had eclipse-themed sales and promotions which they were offering to locals and visitors, along with eclipse-related humor. We planned to watch the eclipse from our relative’s house, and we brought our eclipse glasses along with eclipse filters for our iPhones. At the expected time, the disk of the sun began to be covered by the moon to a progressively greater extent. There were some clouds, but they were thankfully very high in the atmosphere and did not obstruct our view of the sun significantly. At one point we tried the pinhole camera trick to see an image of the sun using a colander. As the moon covered an increasing area of the sun, it got cooler, and there was a noticeable decrease in the amount of sunlight around us. We noticed a reduction in the amount of bird songs coming from the woods, but we heard a new bird singing, the mourning dove. These birds only sing towards dawn and dusk. However, it didn’t feel like dusk. Even with most of the sun covered, it still shone brightly. And then it suddenly happened: totality! We were able to take off our eclipse glasses and look directly at the sun which looked like an annulus with a black center and a bright circumference. The horizon all around us bore an orange and yellowish tinge like a sunset or a sunrise. My relatives, who were sitting out closer to the trees (I was up on the deck), reported that they saw insects that normally only come out at night. In the distance, we heard some loud explosions. Somebody had the original idea of taking advantage of the eclipse to set off fireworks! We saw what appeared like a bright star visible close to the tip of the tree branches to our right. One of my relatives checked with the astronomical app and confirmed it was the planet Venus, which, if it were not for the eclipse, would not have been visible. And then, just as abruptly as it had arrived, the darkness went away. 
The insects disappeared, and the birds resumed singing. In the next hour the moon moved across the sun in the opposite direction. The 2024 eclipse was a festive event where millions of people marveled at the natural phenomenon. With a few exceptions, no one thought the eclipse was a bad omen or a warning from God. But the reaction of people towards eclipses has not always been like this. The explanations that ancient cultures had for eclipses were rooted in superstition. Many such explanations involved the notion that some entities ranging from dragons or demons to actual animals were obscuring or biting the sun, while others equated the event with the antics of some mythological characters or with the actual sun exhibiting this behavior for one reason or another. Not surprisingly, eclipses often inspired fear in both the populace and their rulers with both engaging in rituals to ward off any dire consequences. In the most extreme cases, people were actually killed during or as a result of eclipses. And all this happened because of fear. In my book, The Gift of Science, I quoted the horror writer H. P. Lovecraft who wrote that one of the strongest emotions of humankind is fear, and that one of the strongest fears is the fear of the unknown. This type of fear is the begetter of some of the most barbaric behaviors that humanity has exhibited through history. But today we have science, and science allows us to know. We now know that an eclipse is just the moon moving across the sun and projecting a shadow on the Earth. If someone argues that the eclipse is a sign from God telling us to change our behavior, we can reply that we can predict when and where the eclipse will happen to the nearest minute and to the nearest mile. What kind of sign is that? The eclipse will happen even if we do change our behavior. 
And if we all change our behavior before the eclipse, after the eclipse happens another person can argue that it was a sign of God telling us that we should change our behavior to the way it was before the eclipse! Unfortunately, there are millions of people who accept the science behind eclipses but deny the science behind things such as climate change, vaccines, evolution, and even the roundness of the Earth! And while the scientific disciplines that deal with these things are different, they all use the same method (the scientific method) to discover the truth about the behavior of matter and energy in the world around us. Observations, experiments, measurements, testing of hypotheses, elaboration of theories, making predictions and testing them, refining theories, putting together an increasingly complete description of reality – this is what ALL science is about. The scientific method has allowed us to understand eclipses, climate, the immune system, the origin of species, and the curvature of the Earth. You can’t accept what one group of scientists has discovered using this method while denigrating and delegitimizing what other scientists have discovered using the same method! When we planned our trip to see the eclipse and marveled at its occurrence, we benefited from hundreds of years of research carried out by thousands of scientists. Let’s not allow ourselves to be swayed by ignorance, misinformation, and conspiracies. Let’s benefit from the work that ALL scientists have performed. The photos belong to the author and cannot be used without permission. I visited the West Virginia Penitentiary in Moundsville, West Virginia. The prison was built in 1866 and decommissioned in 1995, and now it is used as a museum. During its 129 years of existence the prison executed 94 inmates (85 by hanging and 9 by electrocution), but close to 1,000 other inmates died due to disease and violence. 
The guide that took us on the tour of the penitentiary regaled us with horrific stomach-churning tales of life in prison. From a mess hall and kitchen awash with maggots, roaches, and rats, and two and sometimes three inmates housed in 5 by 7-foot cells, to corruption, riots, escapes, beatings, stabbings, shootings, suicides, torture, dismemberments, rapes, and grisly botched executions. As we walked the hallways of the penitentiary and our footsteps echoed off its walls, we could vividly imagine the suffering that its denizens experienced for many years. The West Virginia Penitentiary was once listed in the Department of Justice’s “top ten most violent correctional facilities”. One of its nicknames was “Blood Alley”. Tourism of the West Virginia Penitentiary can serve as a reminder of the history of incarceration in our country and how it is still transitioning to avoid cruel and unusual punishments and to focus on rehabilitation of inmates. But apart from that, this prison has become a hub for a different kind of interest: paranormal tourism and investigations! Due to its violent past, the prison is said to be a place where paranormal phenomena are common. It has a rich lore of both inmates and guards hearing “voices” in empty rooms or seeing “shadows” or other apparitions walking the halls. Besides its regular tours, the West Virginia Penitentiary offers guided and unguided paranormal tours such as “Public Ghost Hunt”, “North Walk”, and “Thriller Thursdays”. There also are events with actors such as “The Dungeon”, and an escape room experience: “Escape the Pen: The Execution”. Paranormal investigators with their gear can also pay to stay overnight in the most haunted areas of the prison, and the prison hosts paranormal conferences with guests, speakers, vendors, music, and concessions. The prison has been featured in many programs and documentaries. 
When we asked our guide if he had seen any ghosts during his walks through the prison, he replied in a very matter-of-fact way that he has been occasionally prodded, poked, and scratched, or has had his cap swatted from his head when there was no one around, but that he has learned to ignore these events. He figured out that these are the things ghosts do, so he doesn’t mind them. So what’s a scientist (that would be me) to make of this? Well, the question is whether you regard the paranormal as a belief or as a physical phenomenon. If it is a belief, then you accept the paranormal as a matter of faith, and that’s the end of that. But if you claim it’s a real physical phenomenon, you have to produce reproducible evidence of high quality if you expect others to accept it. For example, when you eat and the glucose level in your blood goes up, your pancreas produces the hormone insulin, which increases glucose uptake and metabolism by your organs, which brings the levels of the sugar in the blood back down. You can measure insulin and glucose concentrations in the blood. You can measure insulin release by the pancreas in response to glucose. You can show that insulin triggers glucose uptake and metabolism. You can work with isolated cells, or organs, or whole lab animals, or quantify the process in human beings. Many scientists from different cultures, ethnic backgrounds, and political and social persuasions, using different sources of funding and methodology, have obtained this result. This is a real physical phenomenon. It is considered settled science. The problem with the paranormal is that evidence of such quality is lacking. 
The discipline of the investigation of paranormal events has not unified behind a leading theory or even a set of hypotheses to explain paranormal phenomena within the framework of modern science, and despite decades of “research” by thousands of individuals, paranormal investigation has yet to produce one battle-tested reproducible result that we can all accept as real. The reasons for this are many, and include a multiplicity of criteria regarding what constitutes a paranormal event, and how and when to detect it. Paranormal investigators who keep churning out testimonials, photos, videos, and sound recordings obtained in uncontrolled environments and in a fashion which does not rule out alternative explanations, will never convince any scientists. This is even more true today when there are many sophisticated methods of altering photographs, videos, and recordings. But there is an even bigger problem with the whole paranormal research field. The West Virginia Penitentiary has over 100,000 paying visitors a year, which represents a sizeable source of income, and many of these visitors are drawn there not merely by the history of the prison, but by its ghostly reputation. The truth is that ghosts, or the possibility that they exist, sells. Paranormal investigators who produce videos or programs must provide their viewers what they want to see if they wish to earn any income. If they produce a string of videos or programs where they come up empty handed, their viewers will get bored and leave. If people are paying to see ghosts, you have to entertain them, you have to give them ghosts, or at least the impression that they may have felt, or seen, or heard something resembling the evidence for one. 
With the commercial pressures to sell the existence of ghosts and the potential for self-delusion, dishonesty, and hoaxes, I am afraid that nothing short of capturing an actual ghost or finding one that appears or at least performs its antics regularly in the same place at the same time and/or date (and can thus be studied reliably) will convince scientists that ghosts are real. Paranormal investigators may balk at these requirements calling them unfair, but I think that this is the high bar they need to clear to have their findings regarded as real. The photographs belong to the author and can only be used with permission. Part of the information presented here was obtained from the book: Images of America West Virginia Penitentiary by Jonathan D. Clemins published in 2010 by Arcadia Publishing. Many people decry science-based government regulation of medical products, cures, or devices as an intrusion on the freedom of the people to make up their own minds on the effectiveness of such things. In this age of heightened skepticism of government and scientists, this question is once again relevant. Why should government and scientists be the ultimate arbiters of what you can sell to people or what products they can buy and use? Why not leave it up to the consumers themselves? With this question in mind, I visited the Museum of Quackery and Medical Frauds. This museum is located within the Science Museum of Minnesota as an exhibit called “Weighing the Evidence”. The exhibit contains many bogus therapies and medical devices. In this essay, I will mention some. An interesting pseudoscience featured in the museum is Phrenology. Phrenologists stated that distinct areas of the brain were responsible for an individual’s cognitive functions, and then they claimed that by measuring the shape of a person’s skull they could gain insight into the extent to which these brain areas underlying the skull determined a person’s behavior and cognitive abilities. 
The central assumption of phrenology was that any area having a dominant effect in the personality would result in the skull over that area exhibiting a bump, which they would measure. Phrenology has been debunked by scientists. The phrenology areas of the brain do not correlate to brain function, and the bumps in a person’s skull do not correlate to brain shape. Regardless, phrenology was very popular from the mid-1800s and into the first third of the 20th century, and was used for things such as defending and treating criminals, evaluating a parent’s love for a child, and matching people for marriage. Whereas most phrenologists relied on palpation, measuring tapes, or calipers to assess the “bumpiness” of the skull, others used more sophisticated equipment such as the psychograph. The museum has one such device invented and patented by businessman Henry C. Lavery which could measure a person’s skull and rate 32 different mental faculties from deficient to very superior. Needless to say, this is all bunk. One picturesque character featured in the museum is Dr. John Harvey Kellogg. Kellogg was a bona fide surgeon and is most known as the inventor of the corn flake. Kellogg was also the staff physician of one of the most popular medical spas of the early 20th century, Battle Creek Sanitarium. At this site he subjected his patients to all sorts of therapies that have not been validated by science such as electrotherapy, hydrotherapy, mechanotherapy, phototherapy, thermotherapy, and others. Kellogg was also a great believer in using vibrations because, according to him, they improved health and relieved constipation. To this end, he invented a vibratory chair that vibrated 60 times per second and of which a working model is featured in the museum. Again, there is no evidence that this therapy works. There are many other bogus products and therapies featured in the museum ranging from tonics, magnets, and vibratory belts, to radio waves and lights of different colors. 
You may chuckle at some of the crazy claims, therapies, and devices featured in the museum, but it can be argued that at most they made people waste their money, and if the people liked them, that’s their business. The problem is that some therapies actually killed patients, and at the beginning of the 20th century the US government had limited authority to protect consumers. For example, before the deleterious effects of the radioactive element radium became widely known, it began to be marketed as an all-natural enhancer of health that would restore vigor, improve sex life, and cure many diseases. Radium was included in a wide array of products ranging from chocolates and suppositories to toothpaste and dressings. This led to many people suffering from radiation poisoning and cancer. One famous case was the radium girls. These were women working at factories painting the dials of watches with radium-laced paint, which they were told was harmless. As part of their work, the women would often lick their paintbrushes to sharpen them, a practice that was encouraged by their supervisors. These women developed several illnesses including widespread damage to their mandibles; a condition that became known as radium jaw. The radium girls, as they were then called, sued their employers, and the resulting trial and associated publicity led to the passing of labor safety and work compensation laws as well as the creation of the Occupational Safety and Health Administration (OSHA). Another famous case involved radium-laced water of which one particular brand was Radithor. The American industrialist and amateur golf champion Eben Byers was prescribed Radithor for an arm injury in 1927. He became a big fan of the stuff and over three years consumed 1400 bottles. Byers began losing his teeth and developed cancer in his mouth. 
His upper jaw and most of his lower jaw had to be removed leaving him severely disfigured, but his bones continued deteriorating and holes formed in his skull. He died in 1932 and was buried in a lead-lined coffin. His remains were exhumed in 1965 and were found to be dangerously radioactive. It has been calculated that Byers consumed more than three times the lethal dose of radium. Due to his high social profile, Byers became the poster boy for the ill-effects of radium, and its use declined thereafter. The final case that led to strong regulation of health products and therapies was the 1937 deaths of more than 100 persons, many of them children, as a result of the ingestion of Elixir of Sulfanilamide which contained the poisonous solvent diethylene glycol. The next year Congress passed the Federal Food, Drug, and Cosmetic Act, and as a result of this a proof of safety would be required before the release of any new drug or cosmetics. Going back to the question posed at the beginning of this post, history indicates that the average person is no match against bogus drugs or therapies cloaked in pseudoscientific mumbo jumbo backed by clever marketing campaigns. We need scientists to evaluate these things, ask questions about their safety and effectiveness, and conduct tests. We also need government to enforce the conclusions of the evaluation by the scientists. Additionally, many compounds or therapies may be safe but not effective or untested, and people want them to be labelled as such to prevent them from wasting their money. This is the function of science-based government regulation. The photo of the bottle of Radithor by Sam LaRussa is used here under a Creative Commons Attribution-ShareAlike 2.0 Generic (CC BY-SA 2.0) license. The other photographs belong to the author and cannot be used without permission. Many years ago, I went to a staged production of Hairspray. 
This show was based on a 1988 movie by John Waters about a bunch of teenagers living in the city of Baltimore in the 1960s. In the staged production that we saw, the performance would stop at certain times and the author of the original film, John Waters, would speak from a lectern and tell amusing anecdotes about the movie and its actors. In the movie, the dream of the teen characters is to dance in one of the coolest shows on television, a teenage dance show called The Corny Collins Show. Due to the times, the Corny Collins Show is a segregated show where only white teens can dance except for one day a month (Negro Day) when black teens participate. The star of the movie is Tracy Turnblad, played by actress Ricki Lake. Tracy and her friends, both black and white, spearhead an effort to desegregate the Corny Collins Show. At one point, John Waters informed us that the show featured in the movie was based on a real teenage dance show called the “Buddy Deane Show”, which ran from 1957 to 1964. This show was also segregated and only allowed black dancers every other Friday. With the Civil Rights movement at its apex, the pressure to desegregate the Buddy Deane Show mounted, but the home station that ran the show was unwilling to integrate the black and white dancers, so it just cancelled it. At this point, John Waters interjected, “But who needs reality?” In his movie, Tracy and her friends succeed in integrating the Corny Collins Show, and all the bigots get their comeuppance. Many years later, this is the moment of that function that I remember best: John Waters saying, “But who needs reality?” Being a scientist, I should, in principle, wince at that statement. After all, scientists are in the business of discovering reality. And we know how important reality is for human beings to live a life grounded in facts and evidence free of the shackles of ignorance and superstition. However, the truth is more complicated. 
There are millions of human beings in this world living in societies mired in disease, poverty, disenfranchisement, exploitation, discrimination, repression, and violence. For many of these people, the hope that their condition will improve anytime soon, when viewed from any objective point of view, is nothing but a fantasy. Yet, in these societies, individuals ranging from poets, writers, painters, and filmmakers, to political and social leaders or just regular folks, articulate and visualize fantasies that their trials will end one day, and that their wrongs will be made right. Thus, fantasy can actually play a constructive role in our lives. Fantasy allows us to imagine a better future where good prevails over evil, and we overcome the intractable problems that burden us, to be free in that happily ever after ending, where the just are rewarded, and the wicked are punished. From fairy tales to movies such as Hairspray, fantasy can be a powerful motivator for change and a source of strength and inspiration that moves us to dream, hope, and act. But fantasy can also be a destructive force, and we all had the opportunity to witness this on January 6th of 2021 when a mob of people stormed the U.S. Capitol Building to stop the counting of the electoral college votes of the American people, to harm our elected representatives, and to overturn a fair and free election. These people had been told the lie that the election was stolen, and they believed it to the extent that they were willing to risk their livelihood, their freedom, and their lives to contravene the will of the majority of their fellow Americans. I want to clarify here that I am not making a political point. All the evidence we have indicates that the 2020 election was not stolen. This is an objective description of reality. Arguing otherwise is unreasonable. Now we come to the crux of my argument, which I am presenting here as my opinion. Most fantasies have at their core grievances that are real. 
But what is the grievance that spawned the fantasy that led to the denial of the election results and fueled January 6th? The people involved in these activities had been told for years that there is a cabal of nefarious entities such as the elites, the deep state, the fake news media, liberal Marxists, environmentalists, atheists, LGBT people, and others who hate them and their way of life. And these entities allegedly seek to control or destroy them by several means including manipulating the laws, the schools, the elections, the government, and other things. This is the bogus grievance which has spawned the fantasy among these people that they are under attack, and thus they need to strike back and defeat those who threaten them before it is too late. And after they do so, they will usher in a new era in our republic where their way of life will be safe once again and the bad people will be punished. When this fantasy is accepted, facts, evidence, and reason become irrelevant, and trust in our institutions and their safeguards against abuse of power become non-existent. This is how a fair and free election became a “fraud” where their votes were cancelled and their candidate was denied his rightful victory. This is how a call to a protest, where they were told that if they didn’t fight like hell and show strength they wouldn’t have a country anymore, was interpreted as a directive to attack the very heart of our democracy while they risked life and limb in doing so. This is the frightening power of fantasy. When it arises out of the noblest desires of humanity for a better future, it can be a formidable constructive force, but when it arises out of fear and ignorance fed by lies and misinformation, it can become a formidable force of destruction. The United States Capitol attack collage by Aca1291 is used here under an Attribution-ShareAlike 4.0 International license. The Hairspray movie poster is used here under the doctrine of Fair Use. 
10/28/2023 Do You Understand That Everything You See, Hear, Smell, Taste, and Touch is fake?
I have tried to communicate a fact to my readers regarding the nature of our perception of reality in some of my blog posts, but judging from some of the replies I’ve received, I don’t seem to have gotten the point across. This may have happened because my explanation was one of several ideas I was writing about. So I’ve decided to make the perception of reality the main topic of this post. Let’s go. As the title of this post implies, what you see, hear, smell, taste, and touch is fake. What do I mean by fake? What I mean is that what you see, hear, smell, taste, and touch are not physical realities that are “out there” and are independent from you. And here let me clarify that I am not being flippant or just “expressing my opinion”. What I am telling you is a fact that is accepted by scientists and was discovered a long time ago. This is old news. How can this be? Is life then a dream or some other sort of mystic stuff? The answer is “no”. Let me explain. The brain detects the reality around us through receptors present in our eyes, ears, nose, mouth, and skin. When these receptors are triggered by outside stimuli, they generate an electric signal that travels by the nerves to our brain. So what our brain receives is not light, or sound, or smell, or taste, or tactile signals. What the brain receives is just electric signals. Once these signals arrive at the centers of the brain responsible for perception, these electric signals are filtered, organized, and integrated to CREATE perception. Did you notice that in the previous sentence I put the word “create” in caps and underlined it? Yes, the brain generates a perception for us that is very different from the physical reality present “out there” that generated the signals which were detected by the receptors in our eyes, ears, nose, mouth, and skin. Let’s see how. 
We see color, but color is just a brain-generated representation of the wavelength of the light rays that strike our eyes. The physical reality out there is that light rays have different wavelengths, but we don’t see wavelengths. In fact, if it were not for science, we would not know that light has a wavelike nature! What the brain does is create an internal representation of these different wavelengths where we perceive short wavelengths as purple and long wavelengths as red. With regards to hearing, it’s the same thing. The mechanical perturbations in the air around us reach our ears as compression waves, and by pretty much the same process as vision the brain generates an internal representation of these waves where we perceive short waves as high pitch sounds and the long ones as low pitch sounds. In the case of smell and taste, the receptors in our nose and mouth detect the chemical structure of compounds in the air, food, or drink, and the brain generates the different fragrances and flavors we perceive. The reality “out there” is not fragrances and flavors, but merely chemical structures. Finally, there are receptors in our skin that send the brain a signal when, for example, they detect a difference in temperature. This signal is integrated by our brain to generate the sensations of hot or cold that we experience. The reality “out there” is differences in heat content, but we perceive this as “hot” or “cold”. Something similar happens for other skin sensations such as the compression of our skin (which we perceive as pressure) or the damage to our skin (which we perceive as pain). This is why, as I stated in the title of this post, everything you perceive is fake. What you perceive is not a real (veridical) representation of the reality “outside” of you. And of course, the way our brain perceives reality also affects vastly more complicated things such as the emotions we experience, the convictions we have, or the actions we take. 
But, if this is true, how can we even function? The answer is because there is a correlation between the reality “out there” and our perception of it. And we know this correlation is high because life would otherwise not be possible (if you don’t recognize the edge of a cliff as you approach it, you will die). This is a situation analogous to when you work with a computer. You create and move and delete files all the time on your screen, but the physical processes taking place on your screen are very different from the physical processes that are taking place in the hard drive of the computer (so much so that some people in information technology refer to the screen as the user illusion). However, because they are correlated, it works. Thus, at the level of our basic senses (sight, hearing, smell, taste, and touch), our perception of reality, although fake, is not false. So, if there is a correlation between the sensations that our brain generates and the reality “out there”, why should this even be an issue worthy of consideration? The reason is that the correlation between reality and what we perceive is not 100%. There are many well-known illusions that can fool our senses because they exploit this disconnect between reality and perception. But at more complex levels, there are many biases in our perception of reality that can lead us to filter said reality and distort our perception of it to the point that it becomes false. For example, many people still accept conspiracy theories such as those denying the results of the 2020 election, the effectiveness of the COVID-19 vaccines, climate change, the 9/11 attacks, or the moon landing. Others accept false world views such as creationism, QAnon, or the flat Earth. How our way of perceiving reality can lead to its distortion is an active area of research in scientific fields ranging from molecular neurobiology and cognitive neuroscience to psychology and economics. 
"Five Senses" by TheNickster is licensed under CC BY-SA 2.0.
