How many times have you read about the results of studies that were claimed to be or not be statistically significant? How many times have you read that the frequency of some occurrence was found or not found to be statistically significantly above chance? It’s as if the phrase “statistically significant” were considered some sort of seal of legitimacy. If it is statistically significant, it must be true, no? And if it is not statistically significant, it must be false, right? The truth, of course, is more complex than that.

Let’s address one issue that invalidates many studies regardless of whether their results are statistically significant or not: sample size. Studies conducted with a small sample size, if not carefully designed, are very prone to variability. A very big red flag is when the results of a small study are unexpected. Studies with a small sample size can produce false results either in favor of or against the premise that they are addressing, although most of the time they fail to produce statistically significant results for an effect, even if the effect is real. This sample size issue is often not reported when the study is presented in the news or, if reported, it normally goes something like this:

The authors caution that this was a study with a small sample size but (there is always a “but”) if this result can be repeated in a larger study then…

On the one hand, the authors acknowledge that the results of the study may be bogus due to the small sample size, but on the other hand the authors nevertheless find the results worthy enough to publicize or to acquiesce to the desire of journalists to publicize them!

So, are all studies with a small sample size bad, and should they be avoided? Not necessarily. Sometimes scientists perform small studies intended to give the issue being tested a “look-see”. Sometimes studies with small sample sizes are all that is possible due to budgetary constraints or other constraints such as working with a rare disease. Scientists also perform small studies to gain experience in order to later design larger studies. But there is another reason a study with a small sample size may be justified. Consider the following statistical joke:

A scientist asks a group of 3 students to concentrate on teleporting through a solid wall. Of these 3 students, one manages to accomplish this feat. Excitedly, the scientist informs a colleague of the results of the experiment, but his colleague dismisses it outright, arguing that with a sample size of 3 you cannot possibly obtain any statistically significant results.

The premise of this joke is that one person achieving such a feat would be enough. No further sample (or even statistics for that matter) is required! This is intended to illustrate that the nature of the effect being evaluated is important. If scientists are only interested in detecting a very large effect, then a study with a small sample size may be totally justified.

But you may argue that a study with a large sample size is always more desirable, no? After all, you can’t go wrong if you perform a study with a large sample size, right?
Not only are studies with large sample sizes more expensive, time-consuming, and difficult to implement, but there is a seldom discussed and surprising downside to large sample sizes, illustrated in the following example:

A researcher spends several years performing trials to evaluate whether human subjects can mentally affect the outcome of the toss of a coin as simulated by a computer. After accumulating and analyzing hundreds of thousands of trials, the researcher finds that human subjects can influence the computer mentally to produce heads as opposed to tails with a frequency of 50.0001%, and that this effect is statistically significantly above mere chance.

The problem outlined in the example above is that with a very large sample size you can detect anything, including random background fluctuations or equipment calibration imperfections, as statistically significant. Detecting such a small effect, regardless of whether it is statistically significant or not, may not only be meaningless, but is also devoid of any practical significance.

The dirty little secret of statistical analysis is that statistical significance cannot replace good judgement. Before a study, you have to ask what size of effect you want to detect and whether it would be important to detect an effect of that magnitude, if indeed it is present. These questions will lead to determining the correct sample size for the study, and in fact whether the study should be performed at all!

To recap and answer the question posited in the title of this post: in statistics, whether sample size matters or not depends on the magnitude and importance of the effect being evaluated.

1) The detection of an important small effect may require a study with a large sample size.
2) The detection of an important large effect may be achieved with a carefully designed study employing a small sample size.
3) The detection of a small effect with a large sample size may be irrelevant if the effect is not important, regardless of statistical significance.
4) The detection of an effect with a small sample size in a study not carefully designed is likely to be a happenstance occurrence, regardless of statistical significance.

So next time you hear about whether something was statistically significant, inquire about sample size.

Image by Nick Youngson used here under an Attribution-ShareAlike 3.0 Unported (CC BY-SA 3.0) license.
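The relationship between effect size and sample size described in this post can be made concrete with a rough calculation. The sketch below is only an illustration under stated assumptions: it uses the textbook normal approximation for a one-proportion test on a coin toss, a conventional 5% significance level and 80% power (neither value comes from this post), and the function names `trials_needed` and `p_value` are made up for the example.

```python
from math import ceil, sqrt
from statistics import NormalDist


def trials_needed(p_true, p_null=0.5, alpha=0.05, power=0.80):
    """Approximate number of tosses needed to detect p_true against p_null.

    Two-sided one-proportion z-test, normal approximation.
    alpha and power are assumed conventional values, not taken from the post.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the significance level
    z_power = z.inv_cdf(power)           # quantile for the desired power
    numerator = (z_alpha * sqrt(p_null * (1 - p_null))
                 + z_power * sqrt(p_true * (1 - p_true)))
    return ceil((numerator / (p_true - p_null)) ** 2)


def p_value(heads, trials, p_null=0.5):
    """Two-sided p-value (normal approximation) for `heads` out of `trials`."""
    standard_error = sqrt(p_null * (1 - p_null) / trials)
    z_score = (heads / trials - p_null) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z_score)))


if __name__ == "__main__":
    # A large effect needs few tosses; a tiny effect needs an enormous number.
    for p in (0.75, 0.55, 0.501):
        print(f"true heads rate {p:.3f}: about {trials_needed(p):,} tosses")

    # The same observed 50.1% heads rate at two very different sample sizes.
    print("10 thousand tosses:", p_value(5_010, 10_000))
    print("10 million tosses: ", p_value(5_010_000, 10_000_000))
```

Under these assumptions, a heads rate of 75% can be picked up with a few dozen tosses, while a rate of 50.1% takes roughly two million. The same 50.1% that is indistinguishable from chance in ten thousand tosses becomes overwhelmingly "significant" in ten million, even though its practical importance has not changed. By the same approximation, a shift as small as the 50.0001% in the coin-toss example would take on the order of trillions of tosses to detect reliably, which only underlines how little such an effect would matter.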


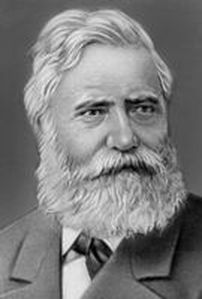

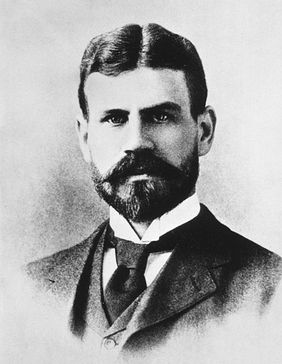

Most scientists have it easy. By this I don’t mean that science is easy, but rather that scientists experiment on animals or OTHER people. Sure, these experiments are conducted following ethical guidelines to minimize pain to laboratory animals or to ensure the safety of patients, but the point of my argument is that it’s easy to administer a treatment that makes the entity receiving said treatment sick, or that carries other risks, when that entity is not yourself. However, throughout the history of science some scientists have broken through the wall of security that separated them from their test subjects and became their own lab rats, their own patients. These scientists who experimented on themselves conducted what I call “heroic science”. Let’s look at some of these characters.

In a previous post, I mentioned the case of the Australian physician Dr. Barry Marshall, who wanted to convince skeptical fellow scientists that ulcers were not caused by excessive stomach acid secretion due to stress, but rather by a bacterium called Helicobacter pylori. Unable to develop an animal model or to obtain funds to perform a human study, he experimented on himself by drinking a broth containing Helicobacter pylori isolated from a patient who had developed severe gastritis. He developed the same symptoms as the patient and was able to cure himself with antibiotics. As a result of this and other studies, Dr. Marshall was awarded a Nobel Prize in 2005.

Another of these heroic individuals was Werner Forssmann, who as a resident in cardiology in a German hospital wanted to try a procedure to insert a catheter through a vein all the way to the heart. Forssmann was convinced that if this could be done, it would allow doctors to diagnose and treat heart ailments. However, he could not obtain permission from his superiors to perform the experiment on a patient, so he tried it on himself.
He made an incision in his arm, inserted a tube, and guiding himself with X-ray photography, he pushed the tube all the way to his heart. At the time there was a lot of opposition to Forssmann and his unorthodox methods, and although he persevered for some time, he became a pariah in the cardiology field and was forced to switch disciplines, becoming a urologist. However, eventually other scientists refined the technique of catheterization described in his work, and developed it into valuable medical procedures that have saved many lives. Forssmann had the last laugh when he was awarded the Nobel Prize in 1956.

Most heroic science studies didn’t lead to a Nobel Prize, but some resulted in useful information. For example, John Stapp was an air force officer who experimented on himself to test the limits of human endurance in acceleration and deceleration experiments. He would be strapped to a rocket that would rapidly accelerate to speeds of hundreds of miles per hour and then stop within seconds. As a result of these brutal experiments, Stapp suffered concussions and broke several bones, but he survived, and the knowledge generated by his research eventually resulted in technologies and guidelines that today protect both car drivers and airplane pilots.

Despite its appearance of recklessness, heroic science is seldom performed in a vacuum, but rather it is performed by individuals who believe that, based on other evidence, nothing will happen to them.

Such was the case of Dr. Joseph Goldberger. In the early 1900s, the disease Pellagra afflicted tens of thousands of people in the United States. Dr. Joseph Goldberger performed experiments that indicated that Pellagra was a disease that arose due to a dietary deficiency rather than a germ.
Faced with recalcitrant opposition to his ideas, Goldberger and his assistants injected themselves with blood from people afflicted with Pellagra and applied secretions from the patients’ noses and throats to their own. They also held “filth parties” where they swallowed capsules containing scabs obtained from the rashes that patients with Pellagra developed. None of them developed Pellagra. This, along with other evidence, demonstrated that Pellagra was not a disease carried by germs.

Another case was that of the American surgeon Nicholas Senn, who in 1901 implanted under his skin a piece of cancerous tissue that he had just removed from a patient. As he expected, he never developed cancer. Senn did this to demonstrate that cancer is not produced by a microbe, as it is not transmissible from one human to another, although at the time there were many pieces of evidence that taken together indicated that this was the case.

Of course, the mere fact that you perform heroic science doesn’t mean that you will reach the right conclusions.

Back in 1892 the German chemist Dr. Max von Pettenkofer disputed the theory that germs caused disease, and specifically that a bacterium called Vibrio cholerae caused the disease cholera. He requested a sample of cholera bacteria from one of the most prominent proponents of the theory, Dr. Robert Koch (who won the Nobel Prize in 1905), and when he got the sample he proceeded to ingest it! Pettenkofer fell slightly sick for a while, but did not develop cholera. He claimed that this proved his point, but the vast majority of the evidence generated by others indicated he was wrong, and his claim was never accepted.

A rather remarkable example of misguided heroic science is the work of Doctor Stubbins Ffirth. This individual studied the incidence of Yellow Fever cases in the United States back in the 1700s and noticed that Yellow Fever was much more prevalent during the summer months.
Thus he developed the notion that Yellow Fever was due to the heat stress of the summer months, and that therefore it was not contagious. To prove this he embarked on a series of gross experiments where he exposed himself to the bodily fluids of Yellow Fever patients. He drank their vomit, he poured it in his eyes, he rubbed it into cuts he made in his arms, he breathed the fumes from the vomit, and he also smeared his body with urine, saliva, and blood of Yellow Fever patients. Since he never contracted the malady, he concluded that Yellow Fever was not contagious. However, not only did Ffirth employ bodily fluids from late-stage Yellow Fever patients whose disease we now know not to be contagious, but he also missed the fact that Yellow Fever is transmitted by mosquitoes (see below), which is the reason why it’s more prevalent during the summer!

And finally, some of the scientists who engaged in heroic science suffered or died as a result of their experiments, but their sacrifice saved lives or resulted in advancements in the understanding of terrible diseases, as the following two cases show.

In 1900 the army surgeon Walter Reed and his team in Cuba put to the test the theory that Yellow Fever was spread by mosquitoes, which at the time was not taken seriously by many scientists. They had mosquitoes feed on patients with Yellow Fever and then allowed the mosquitoes to bite several volunteers, among whom were two members of Reed’s team, the American physicians James Carroll and Jesse Lazear. Several of the people bitten by these mosquitoes developed Yellow Fever, including Carroll and Lazear. Lazear died, but Carroll recovered, although he experienced ill health for the rest of his life. After this demonstration that Yellow Fever was transmitted by mosquitoes, a program of mosquito eradication was implemented that succeeded in dramatically reducing the cases of this disease.
In the Andes in South America there is a disease called Oroya Fever that used to periodically decimate the population in some localities. In the 1800s many physicians suspected that this disease was connected to another condition that led to the production of skin warts (Peruvian Warts), but no one had ever demonstrated they were connected. Daniel Alcides Carrión, a student of medicine in the capital of Peru, Lima, set out to prove that these two diseases were the same. He removed a wart sample from a patient with the skin condition and inoculated himself through incisions that he made in his arms. Carrión developed the symptoms of Oroya Fever, thus demonstrating that these two diseases were different stages of the same disease, which is now called Bartonellosis. Unfortunately, he died from the disease, but he is hailed as a hero in Peru.

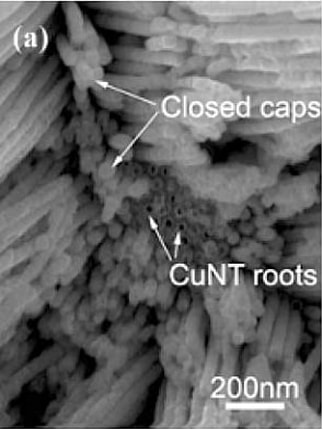

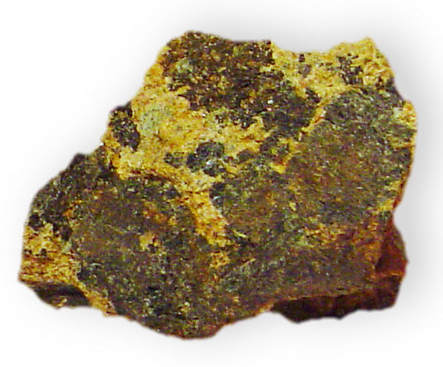

The above are but a fraction of the cases of individuals who risked life and limb performing heroic science. Many people criticize the usefulness of most cases of heroic science, especially when it just involves a sample size of “one”, and these critics have a point. In the end, heroic science should be held up to the same standards of rigor as regular science. However, whether those who experimented on themselves did it out of a need to overcome bureaucratic obstacles, a belief in the correctness of their ideas, scientific curiosity, or because they were crazy, it is hard not to feel a certain degree of admiration for the individuals who put their lives and health on the line for a scientific idea. They were willing to cross a line of security that most researchers wouldn’t dare to cross.

The photograph of Barry Marshall by Barjammar is in the public domain. The photograph of Dr. Goldberger made for the Centers for Disease Control and Prevention is in the public domain. The photograph of Max von Pettenkofer is in the public domain in the US. The photograph of Jesse Lazear from the United States National Library of Medicine is in the public domain.

A warning to my more sensitive readers: This post contains some rude terms and juvenile humor!

“Get your mind out of the gutter!” is a common exhortation used on a person who interprets things or thinks about things in indecent ways. In our culture, having your mind in the gutter is clearly considered to be something negative. However, sometimes having your mind in the gutter, or having someone around with their mind in the gutter, can actually be an advantage. How can this be?

A joke in the biotech industry involves the owner of a genetics company who is thinking about the name he should give to a new branch of the company that he is planning to open in Italy.
The owner innocently decides that because his company is a genetics company, and because the new branch is opening in Italy, he will call the new branch of the company “Gen-Italia”. It is in situations like these where having someone around with their mind in the gutter can be useful to spot the vagaries of language and slang.

The above is just a joke, but consider the true story of a company called “Custom Service Chemicals”. Back in 1964 they paid a marketing firm to suggest a new name for them. The marketing firm came up with a combination of the words “Analytical” and “Technology”, which perfectly described what the company was doing, and thus they changed their name to “Analytical Technologies”, which they fatefully proceeded to abbreviate to “AnalTech”. Apparently no one involved in the name-change operation had their mind in the gutter to spot the obvious problem with the new name. As a result of this, the company has been the butt of jokes (get it?) for all of its existence, culminating in one memorable episode in 2017 when newspapers reported that a truck had plowed into AnalTech, creating a hole which released an odor that led to a HazMat situation. The company has sometimes complained about the puerile humor it is subject to, but they get no sympathy from me!

However, the AnalTech story is nothing compared to the fiasco that beset none other than the company Pfizer. Pfizer is a pharmaceutical giant with 90,000 employees selling products in 90 international markets and generating billions of dollars in profits. This is the company that brought us penicillin and Viagra. Pfizer normally functions like a well-oiled machine when it comes to advertising their drugs, but in 2012 they experienced a major breakdown. At the time Pfizer had come up with a drug for osteoarthritis in dogs called Rimadyl, and they wanted to put together a marketing campaign to promote it.
Unfortunately there was no one in their marketing team with their mind in the gutter to enlighten them. Thus it was that to encourage people to use Rimadyl on their ailing dogs, they invited their customers to “rim-a-dog”; in other words, apply Rimadyl to their canine friends. Pfizer even created a long-gone website: rimadog.com. Alas, if someone had made even a cursory search of possible slang meanings of the verb “to rim”, they would have averted the catastrophe. In response to the public outcry from dog lovers, Pfizer apologized and modified their marketing of Rimadyl, but it was too late. They had already left a permanent mark in the annals (sorry, couldn’t resist using that word) of botched marketing campaigns.

Sometimes what happens is that the people involved in coining a problematic name are foreign individuals who are not well acquainted with American slang. The Hungarian Nobel Prize winner Albert Szent-Györgyi discovered vitamin C and found that paprika contained large amounts of it. This was a godsend to the depressed economy of the Hungarian town of Szeged where Szent-Györgyi resided in the early 1930s. Merchants and scientists joined forces to produce and sell a paste derived from paprika which was christened “vitapric”. Sales of the paste were so good in Europe that Szent-Györgyi and his colleagues wanted to sell vitapric in the United States. Luckily for them, someone with their mind in the gutter advised them to change the name of the product!

However, a group of Chinese researchers were not that fortunate. Dachi Yang, Guowen Meng, Shuyuan Zhang, Yufeng Hao, Xiaohong An, Qing Wei, Min Ye and Lide Zhang discovered a method to make very small tubes (nanotubes) using metals like bismuth and copper. To name their creations they used the abbreviation for the word nanotubes, “NT”, preceded by the chemical symbols of bismuth (Bi) and copper (Cu). Thus they referred to bismuth nanotubes as “BiNTs”, and copper nanotubes as “CuNTs”.
But what really cemented the fame of this Chinese group for posterity was that they wrote up the results and got them published in a mainstream English language scientific journal. Thus in their paper entitled “Electrochemical synthesis of metal and semimetal nanotube–nanowire heterojunctions and their electronic transport properties” you find no fewer than 12 references to “BiNT/s” and 50 references to “CuNT/s”. It seems that not a single person with their mind in the gutter was involved in the process of review and editing of the paper. Adding insult to injury, the Chinese research group received the 2007 Vulture Vulgar Acronym (VULVA) award from the British technology news and opinion website The Register.

Of course, it’s not always a foreigner unacquainted with English slang who dreams up these terms. In 1972 doctors Alexander Pines, Michael Gibby, and John Waugh developed a new procedure for high resolution nuclear magnetic resonance (NMR) of rare isotopes and chemically dilute spins in solids. This was a significant breakthrough because up to that moment NMR could only be applied to liquids. But then the authors proceeded to name their new technique: Proton Enhanced Nuclear Induction Spectroscopy. The problem with this name is, of course, the acronym. These naughty scientists went on to publish scientific articles where the name of the technique was prominently displayed in the title. It seems that no reviewer or editor with their mind in the gutter caught on to the joke. For the sake of decency most scientists nowadays refer to the technique as “cross-polarization”.

While the name for the NMR technique was intended, some rude acronyms may have just arisen unintentionally from names put forward by people with insufficiently dirty minds.
Thus the short denomination for the enzyme argininosuccinate synthetase is “ASS”, the cell culture substrate poly-L-ornithine is abbreviated as “PORN”, and the muscle relaxant and murder weapon, suxamethonium chloride, is called “Sux”.

Some scientific disciplines have certain traditions or follow some methodologies that make them prone to generating silly or rude names.

In geology there is a custom of naming a new mineral by adding the suffix “-ite” (derived from a Greek word meaning rock) to whatever the mineral is named after. But this tradition can create problems when there is no one around with their mind in the gutter. For example, when a new mineral made up of magnesium, iron, and silicon was found in the locality of Cummington in Massachusetts, it was christened “Cummingtonite”; when a new mineral made up of calcium, silicon, and carbonate was discovered in a mine named “Fuka” in Japan, it was branded “Fukalite”; and a mineral made up of silicon and aluminum was named “Dickite” in honor of the chemist Allan Brugh Dick, who discovered it.

In chemistry, when a new chemical compound is isolated from a plant, often the name of the particular chemical form of the compound is combined with the name of the genus or the species of the plant. The problem is that several plants do not have the most innocent of names, and there seems to be a dearth of people with their minds in the gutter among both the chemists and those who vet these names. Thus “vaginatin” was isolated from the plant Selinum vaginatum, “erectone” was isolated from the plant Hypericum erectum, “clitoriacetal” was isolated from the plant Clitoria macrophylla, “nudic acid” was isolated from the plant Tricholoma nudum, and so on.

I could go on, but from all these examples you get the idea. So next time someone is chastised for having their mind in the gutter, intervene and quote a few of these examples to make the case for the usefulness of such a mind in science!

I have taken some of the examples listed in this post from Paul May’s amazing webpage “Molecules with Silly Names” and combined them with my own research. Mr. May has also written an eponymous book that can be bought on Amazon.

Dog image by Calyponte used here under an Attribution-Share Alike 3.0 Unported license. The image from the copper nanotube article is a screen capture used here under the legal doctrine of Fair Use. The image of Cummingtonite by Dave Dyet is in the public domain.

When people say or write things like “nothing is impossible”, they normally mean this as a motivational slogan intended to help overcome life’s difficulties. They don’t literally believe that nothing is impossible, but rather that talent, focus, hard work, and dedication can overcome seemingly insurmountable obstacles to achieve success. And you know what? I’m fine with that. It may not be accurate, but I understand that people may need a little oomph in their lives. If some accuracy needs to be sacrificed to help people succeed, I am willing to look the other way, so to speak.

However, some people actually believe that the maxim “nothing is impossible” is true, and not only in the realm of personal achievements, but also in the field of science. I have had a few discussions with these mystics that left me wishing that I had applied Alder’s Razor. I have tried to explain that our world functions based on a set of rules that clearly delineate what is and what isn’t possible, and that science is in the business of finding what these rules are.
In response to this, I normally get a list of things that scientists thought to be impossible that were later demonstrated to be possible, along with comments like “scientific theories have been proven false again and again” and some interspersed subtle and not so subtle hints that my mind is not sufficiently open. To these criticisms, I answer that science sometimes moves forward by trial and error, and, as these critics point out, scientists have made mistakes or have underestimated the complexity of the phenomena they were studying. But, as more knowledge was generated and explanations were refined, sufficiently developed scientific theories were established that allowed accurate discrimination of the possible from the impossible. Additionally, I have already pointed out not only that the vast majority of scientific theories have not been proven false, but that there are dangers in keeping your mind too open.

Nevertheless, the more important point, which seems to be ignored by the “nothing is impossible” crowd, is that our very lives depend on knowing what is possible and impossible in the world around us. Think about it. When we walk on a cement surface, we know that it will not suddenly turn into quicksand and swallow us up. When we approach a tree, we know it will not suddenly uproot itself and attack us. When a cloud passes over us, we know it will not suddenly turn to lead, fall, and crush us. We have a very clear understanding of how cement surfaces, trees, clouds, and myriads of other things work, and we know with absolute certainty what they can and cannot do. We know what can and cannot happen. We know what is possible and what is impossible. If this understanding of how our world works were false, our lives would be in peril.

But our capacity to gain this understanding is nothing new or even limited to the human species. In nature we observe that animals also gain an understanding of how their surroundings work through individual experience and from observing other animals. They develop an understanding of what is edible and what isn’t, of what prey is safe to attack and what prey is dangerous, of what places are safe to be in and which are not, and so on. There is a rhyme and reason to this understanding that animals gain. They grasp that certain things are possible and others are not, and they exploit this knowledge to negotiate the complexity of their environments and survive.

The animal ancestors of humans acquired this information like other animals, through their individual experience and from observing others. However, as ancient humans developed the capacity to think and communicate to an extent that was orders of magnitude higher than that of their animal kin, the knowledge they derived from experience became insufficient.
There were many scary things happening in the world that they did not understand and could not control, like earthquakes, storms, volcanoes, droughts, pests, and disease, that could dramatically affect their lives. And there were also other things like eclipses, comets, shooting stars, or the moon acquiring a red hue that were mysterious. Ancient humans were able to formulate questions. Why did these things happen? Was there something or someone making them happen? How can I prevent bad things from happening? How can I be spared? Ignorance and fear begat superstition and the belief in the supernatural in order to allow humans to make sense of their surroundings and gain a measure of control over their existence.

But then some humans started investigating how the world around them worked. They observed. They experimented. They found regularities and patterns. They asked and answered questions and made predictions based on the answers, which they then refined from experience. Science was born. And science was able to deliver explanations regarding the nature and inner workings of those scary things and those mysterious things, allowing us to understand them, and in many cases control them, or at least reduce their detrimental effects on our lives.

Viewed from this vantage point, science is merely a more effective way of obtaining the information that we once obtained through experience. Knowledge of what is possible and not possible is key to our survival, and science has been the most successful way in which we have gained this information. To those who say “nothing is impossible” I just have one word: balderdash!

Image from Pixabay is free for commercial use (Creative Commons CC0)
